This piece was originally published at Works in Progress.

In 1929, Bell Labs was frustrated by persistent electrical interference on its transatlantic wireless telephone link. The system had launched two years earlier on the staggeringly low frequency of 60 kHz. After an upgrade to operate between 10 and 20 MHz, electrical disturbances still plagued phone calls between New York and London, which cost $25 per minute. The company assigned a young radio engineer named Karl Jansky to investigate the causes of the interference.

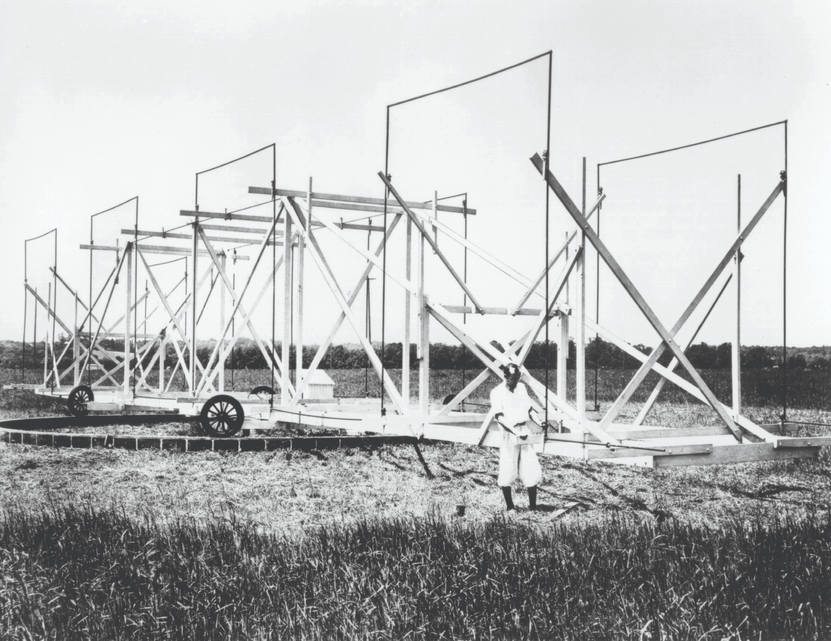

Jansky got to work. He built a 100-foot rotating antenna, nicknamed “Jansky’s merry-go-round,” at Bell Labs’s complex at Holmdel, New Jersey, and started taking regular observations. By mid-1932, he determined that the interference could be explained by the combination of static from local and distant thunderstorms and “a very steady hiss type static the origin of which is not yet known.” Jansky thought this mysterious hiss was related to the position of the sun. The signal rose and fell on an approximately 24-hour cycle, with the deviation from the solar day possibly accounted for by seasonal changes in the latitude of the sun.

Jansky’s Merry-go-round. Credit: NRAO/AUI/NSF

After a few more months of observations, Jansky proved this idea spectacularly wrong. The deviation from the 24-hour cycle was not seasonal but fixed: the signal rose and fell every 23 hours and 56.06 minutes. The missing 4 minutes is the difference between a solar day (the time it takes Earth to rotate once with respect to the sun) and a sidereal day (the time it takes Earth to rotate once with respect to the distant stars). The hiss, therefore, wasn’t due to the sun. It was coming from the Milky Way. 324 years after Galileo first lifted a spyglass to the heavens, radio astronomy was serendipitously born.
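
The 4-minute gap can be checked with a line of arithmetic. Over a year, Earth makes one more rotation relative to the stars than relative to the sun, so a sidereal day is a solar day scaled by N/(N + 1), where N is the number of solar days in a year. The sketch below assumes a 1,440-minute mean solar day and a tropical year of 365.2422 days.

```python
SOLAR_DAY_MIN = 24 * 60   # mean solar day, in minutes
DAYS_PER_YEAR = 365.2422  # solar days in a tropical year (assumed value)

def sidereal_day_minutes(solar_day=SOLAR_DAY_MIN, n=DAYS_PER_YEAR):
    """Earth completes n + 1 rotations relative to the stars for every
    n rotations relative to the sun, so each sidereal day is shorter."""
    return solar_day * n / (n + 1)

sidereal = sidereal_day_minutes()
hours, minutes = divmod(sidereal, 60)
print(f"sidereal day = {int(hours)} h {minutes:.2f} min")          # sidereal day = 23 h 56.07 min
print(f"shortfall vs. solar day = {SOLAR_DAY_MIN - sidereal:.2f} min")  # shortfall vs. solar day = 3.93 min
```

The result agrees with Jansky’s measured period to within about a second.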

Although the discovery of transmissions from the Milky Way was celebrated on the front page of the New York Times, no one initially knew what to do with the information. Astronomers did not know radio engineering, and radio engineers did not know astronomy; there was a Great Depression raging; Bell Labs could find no commercial purpose to continue Jansky’s research; war soon broke out. Radio astronomy languished for over a decade, but it finally began to progress substantially after World War II, when surplus radar equipment was scrounged and repurposed to measure radio emissions from distant celestial objects. Stunning new discoveries were made with every advance in equipment, each one belying the folk notion of the universe as a still, lifeless void.

The sun, it was quickly discovered, is alive with fluctuations both in its core and on its surface, the latter resulting in eruptions of solar plasma. Quasars, galactic nuclei that release astounding levels of energy as matter falls into their supermassive black holes, were discovered in the late 1950s. Pulsars, collapsed stars that (thanks to conservation of angular momentum) rotate with periods as short as milliseconds, were first observed in 1967, although it remains a puzzle how pulsars can be so common while the supernovae from which we think they are born appear so rare. Interstellar space, far from being a perfect vacuum, is home to 10% of the Milky Way’s mass, mostly in the form of hydrogen gas, but also with detectable levels of hundreds of organic molecules, including amino acids and simple sugars. Active galactic nuclei emit jets of gas at relativistic speeds over distances measured in millions of light-years. Our most powerful telescopes have seen photons that have traveled for 13 billion years, originating when the universe was barely formed.

Despite occasional flurries of headlines—such as when imaging scientist Katie Bouman first glimpsed her picture of a supermassive black hole 6.5 billion times the mass of the sun—radio astronomers have labored in relative obscurity, competing for modest government funding against other basic scientific endeavors. In addition, radio astronomy has always been hampered by two fundamental limitations.

The first limitation is the resolution with which radio telescopes can observe the sky. At a given wavelength, the larger the diameter of the receiving dish, the better the resolution of the telescope. Unfortunately, it is not possible to make an arbitrarily large dish: at some point the structure would collapse under its own weight. China’s Five-hundred-meter Aperture Spherical radio Telescope (FAST) is the world’s largest single filled-aperture radio telescope. Although the dish itself is 500 meters in diameter, as the name implies, its effective aperture is only about 300 meters, because the feed antenna can see only that much of the dish at any one time. Better materials might allow a somewhat bigger dish, but we are close to the structural limits on dish size.
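
For a single circular dish, a standard rule of thumb is the Rayleigh criterion, θ ≈ 1.22 λ/D: resolution improves with the diameter D but worsens with the wavelength λ, which is why radio dishes must be so large. The sketch below compares FAST’s roughly 300-meter effective aperture at the 21-centimeter hydrogen line with a small optical telescope; the choice of wavelengths and the 10-centimeter backyard aperture are illustrative assumptions, not figures from the text.

```python
import math

RAD_TO_ARCSEC = 180 / math.pi * 3600

def diffraction_limit_arcsec(wavelength_m, aperture_m):
    """Rayleigh criterion: smallest resolvable angle for a circular aperture."""
    return 1.22 * wavelength_m / aperture_m * RAD_TO_ARCSEC

# FAST's ~300 m effective aperture observing the 21 cm hydrogen line
fast = diffraction_limit_arcsec(0.21, 300)
# A hypothetical 10 cm backyard optical telescope in green light (550 nm)
backyard = diffraction_limit_arcsec(550e-9, 0.10)

print(f"FAST at 21 cm:          {fast / 60:.1f} arcmin")
print(f"10 cm scope at 550 nm:  {backyard:.2f} arcsec")
print(f"FAST is ~{fast / backyard:.0f}x coarser")
```

Even the world’s largest filled-aperture radio dish resolves the sky roughly a hundred times more coarsely than a small optical telescope, because radio wavelengths are so much longer than visible ones.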

Scientists have worked around this limitation with a technique known as aperture synthesis. Instead of using one very large dish to collect radio waves, computers stitch together data from a number of radio telescopes (called an array) into a single coherent observation. These telescopes can be located at a single site, or they can be separated by oceans. The Event Horizon Telescope (EHT), the instrument that Bouman and colleagues used to image the black hole, is actually a network of telescopes in Europe, North America, South America, Antarctica, and Hawaii. The resolving power of an array depends not on the diameter of any one instrument but on the distance between the two instruments that are farthest apart. The EHT’s black hole measurement was made at a stunning resolution of 25 microarcseconds, roughly enough to make out a golf ball on the moon from Earth.
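
The golf-ball comparison can be checked with the small-angle approximation. For an array, a common rule of thumb is θ ≈ λ/B, where B is the longest baseline. The sketch assumes the EHT’s 1.3-millimeter observing wavelength and uses Earth’s diameter as an idealized maximum baseline; real baselines are somewhat shorter, which is why the quoted figure is closer to 25 microarcseconds.

```python
import math

RAD_TO_MICROARCSEC = 180 / math.pi * 3600 * 1e6

def array_resolution_uas(wavelength_m, baseline_m):
    """Approximate angular resolution of an interferometer: theta ~ lambda / B."""
    return wavelength_m / baseline_m * RAD_TO_MICROARCSEC

def angular_size_uas(size_m, distance_m):
    """Small-angle apparent size of an object at a given distance."""
    return size_m / distance_m * RAD_TO_MICROARCSEC

EARTH_DIAMETER_M = 12_742e3   # idealized upper bound on a ground baseline
EHT_WAVELENGTH_M = 1.3e-3     # EHT observing wavelength (230 GHz)
GOLF_BALL_M = 42.67e-3        # regulation golf-ball diameter
MOON_DISTANCE_M = 384_400e3   # mean Earth-moon distance

eht = array_resolution_uas(EHT_WAVELENGTH_M, EARTH_DIAMETER_M)
golf = angular_size_uas(GOLF_BALL_M, MOON_DISTANCE_M)
print(f"Earth-sized array at 1.3 mm: ~{eht:.0f} microarcseconds")
print(f"Golf ball on the moon:       ~{golf:.0f} microarcseconds")
```

Both angles come out in the low twenties of microarcseconds, the same order as the EHT’s quoted resolution.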

The second fundamental limitation is interference from the atmosphere and from human activity. Earth’s atmosphere is mostly transparent to visible light and to radio wavelengths from roughly a centimeter to a few tens of meters. The shortest microwaves and the infrared waves between these two windows usually cannot make it all the way to the ground, because they are absorbed by water vapor and other molecules in the atmosphere. Observing these wavelengths, as well as shorter waves like ultraviolet, X-rays, and gamma rays, has meant placing telescopes in unusual locations. Wavelengths longer than 30 meters (frequencies below 10 MHz) are blocked by the ionosphere, the electrically charged upper layer of the atmosphere, and remain almost completely unexplored.
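
The wavelength and frequency figures in this piece are related by λ = c/f. A quick conversion of frequencies mentioned earlier shows that the 10 MHz boundary corresponds to about 30 meters, and that the original 60 kHz transatlantic link used waves roughly 5 kilometers long.

```python
C = 299_792_458  # speed of light in a vacuum, m/s

def wavelength_m(frequency_hz):
    """Free-space wavelength for a given frequency: lambda = c / f."""
    return C / frequency_hz

for label, f in [
    ("1927 transatlantic link", 60e3),
    ("ground cutoff", 10e6),
    ("upgraded link, top of band", 20e6),
]:
    print(f"{label:27s} {f / 1e6:6.2f} MHz -> {wavelength_m(f):7.1f} m")
```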

The Atacama Large Millimeter/submillimeter Array (ALMA), located on the high Chajnantor Plateau in Chile’s Atacama Desert, is one of the unusually placed instruments suited to capturing frequencies that don’t usually reach the ground. At an altitude of about 16,400 feet (5,000 meters), the site is one of the driest places on Earth, and so remote that it avoids much human-produced radio interference. ALMA is the world’s most powerful single-site millimeter-band instrument, achieving a resolution of 10 milliarcseconds. With little water vapor, thin air, and few human transmissions to contend with, ALMA’s unique location demonstrates the determination with which scientists have worked around the limitations imposed by Earth’s atmosphere. When possible markers of life on Venus were reported in September 2020, it was ALMA that provided the most conclusive evidence.

Antennas of the Atacama Large Millimeter/submillimeter Array (ALMA), on the Chajnantor Plateau. Credit: ESO/C. Malin

To view certain bands, however, you really have to be in space. Space telescopes are incredible instruments. NASA’s most famous, the Hubble Space Telescope, has made numerous significant discoveries since it entered service in 1990, notably estimating the age of the universe at 13.7 billion years, two orders of magnitude more precisely than the previous scientific estimate of 10 to 20 billion years. But Hubble operates mainly in the optical band, which is largely accessible from Earth. NASA’s less famous infrared instrument, the Spitzer Space Telescope, which was deactivated this year after tripling its planned design life, studied bands not observable from the ground. Its replacement, the powerful James Webb Space Telescope, is due to launch next year. It should produce even more stunning observations than Hubble once it comes online, because its infrared sensitivity is ideal for capturing visible light from some of the most distant objects in the observable universe, light that the expansion of the cosmos has stretched into the infrared.
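
The redshift argument is simple arithmetic: cosmic expansion stretches every wavelength by a factor of 1 + z, where z is the redshift. The sketch below uses an illustrative 500-nanometer (green) emitted wavelength and a handful of example redshifts, not values from the text, to show visible light being pushed well past the roughly 0.7-micrometer red edge of human vision.

```python
def observed_wavelength(emitted_m, z):
    """Cosmological redshift stretches wavelengths by a factor of (1 + z)."""
    return emitted_m * (1 + z)

GREEN_LIGHT_M = 500e-9  # visible light emitted by a distant galaxy (illustrative)

for z in (0, 1, 5, 10):
    obs_um = observed_wavelength(GREEN_LIGHT_M, z) * 1e6
    print(f"z = {z:2d}: emitted 0.50 um -> observed {obs_um:.2f} um")
```

Light emitted at z = 10 arrives at about 5.5 micrometers, deep in the infrared, where an optical telescope like Hubble cannot follow it.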

But the biggest problem with these orbiting telescopes is that they cannot easily use the technique terrestrial arrays rely on to increase resolution: adding more telescopes and stitching their data together computationally. James Webb’s aperture is 6.5 meters in diameter, while the Event Horizon Telescope has an effective aperture the size of Earth. Space telescopes lack the resolving power that arrays on the ground can achieve.

Pillars of Creation in the Eagle Nebula. Credit: NASA, ESA and the Hubble Heritage Team (STScI/AURA)

Astronomy, then, faces a Catch-22. Terrestrial telescopes can be built with excellent resolution thanks to aperture synthesis, but they have to cope with atmospheric interference that limits access to certain bands, as well as radio interference from human activity. Space telescopes don’t experience atmospheric interference, but they cannot benefit from aperture synthesis to boost resolution. What we need is to develop a telescope array that can marry the benefits of both: a large synthetic aperture like Earth-based arrays that is free from atmospheric and human radio interference like space telescopes.

A telescope array on the surface of the moon is the only solution. The moon has no atmosphere. Its far side is shielded from light and radio chatter coming from Earth. The far side’s ground is stable, with little tectonic activity, an important consideration for the ultra-precise positioning needed for some wavelengths. Turning the moon into a gigantic astronomical observatory would open a floodgate of scientific discoveries. There are small telescopes on the moon today, left behind from Apollo 16 and China’s Chang’e 3 mission. A full-on terrestrial-style far-side telescope array, however, is in a different class of instrument. Putting one (or more) on the moon would have cost exorbitant sums only a few years ago, but thanks to recent advances in launch capabilities and cost-reducing competition in the new commercial space industry, it is now well worth doing—particularly if NASA leverages private-sector innovation.

Past space megaprojects have not been cheap. Until it retired in 2011, the Space Shuttle was NASA’s workhorse vehicle. It flew 135 missions over its 30-year career, at an average cost of a staggering $1.8 billion per mission in today’s dollars. In its last decade, the Shuttle delivered most of the modules of the International Space Station, which has a mass of 420,000 kilograms, at a launch cost of $65,400 per kilogram, again adjusted for inflation. In contrast, SpaceX’s Falcon 9 can deliver cargo to low Earth orbit for only $2,600/kg on a commercial basis. Falcon Heavy is even more efficient at $1,500/kg. The next few years will only improve matters on the launch front, with Blue Origin’s heavy-lift New Glenn rocket entering service next year, followed by SpaceX’s super-heavy-lift Starship and Blue Origin’s mysterious New Armstrong (given the name, presumably a moon rocket).
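
To put these per-kilogram figures side by side, the sketch below prices a hypothetical 10,000-kilogram payload at each quoted rate. It is pure arithmetic on the numbers above and ignores each vehicle’s actual payload limits, destinations beyond low Earth orbit, and real-world pricing details.

```python
# Launch costs per kilogram to low Earth orbit, as quoted in the text
COST_PER_KG = {
    "Space Shuttle": 65_400,
    "Falcon 9": 2_600,
    "Falcon Heavy": 1_500,
}

PAYLOAD_KG = 10_000  # hypothetical payload, for illustration only

def launch_cost(mass_kg, cost_per_kg):
    """Total launch cost at a flat per-kilogram rate."""
    return mass_kg * cost_per_kg

shuttle_rate = COST_PER_KG["Space Shuttle"]
for vehicle, per_kg in COST_PER_KG.items():
    total = launch_cost(PAYLOAD_KG, per_kg)
    ratio = shuttle_rate / per_kg  # how many times cheaper than the Shuttle
    print(f"{vehicle:13s} ${total / 1e6:5.0f} million  (Shuttle rate / this rate = {ratio:.1f})")
```

At these rates, Falcon 9 is about 25 times cheaper per kilogram than the Shuttle, and Falcon Heavy about 44 times cheaper.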

International Space Station and space shuttle. Credit: NASA

Expensive launches are not the only factor that has doomed space projects to high costs. After all, even at the Space Shuttle’s exorbitant per-kg launch cost, lifting the station’s 420,000 kilograms would come to about $27 billion, under a fifth of the International Space Station’s $150-billion price tag. Cost-plus contracts given to military contractors have led to enormous engineering budget overruns, with NASA’s James Webb Space Telescope as the poster child for this phenomenon. At its inception in 1997, the program was supposed to cost only $500 million, with entry into service scheduled for 2007. Twenty-three years later, total costs are over $10 billion, and with luck, the telescope will launch next year. For these overruns and delays, we can thank a cost-plus contract with Northrop Grumman. Similar delays and cost overruns have plagued NASA’s next-generation, non-commercial super-heavy launch program, the Space Launch System, which is so useless that it could be retired before it even enters service.

To the maximum extent possible, then, a lunar telescope array should be developed and deployed on a commercial, fixed-price basis. We will eventually need such arrays that operate over all wavelengths, but the first priority should be waves longer than 30 meters (frequencies below 10 MHz), which remain unstudied, constituting a striking gap in our scientific knowledge. These waves, which include redshifted signals from the dawn of the cosmos, cannot penetrate the atmosphere, so they cannot be studied from the ground. At these frequencies, space telescopes cannot be adequately shielded from Earth’s radio noise. Perhaps the only place in the solar system where waves longer than 30 meters can be studied is the far side of the moon, where the moon itself blocks Earth’s chatter.

There are two recent proposals to study these low-frequency signals on the moon. The newer one, called the Lunar Crater Radio Telescope (LCRT), was recently selected for a Phase I award from NASA and has not yet been thoroughly studied. It would create a 1-kilometer-diameter dish by using rovers to suspend wires in a lunar crater, becoming the largest single-dish telescope in the solar system. The more developed plan is FARSIDE, which stands for Farside Array for Radio Science Investigations of the Dark ages and Exoplanets. As the extended name suggests, its two primary missions would be to study the cosmological Dark Ages, the period in the history of the universe before the first stars ignited, and planets outside the solar system.

The Dark Ages are of interest because observations of them could place tight constraints on cosmology itself. Before stars formed, the primary source of radio waves in the universe was neutral atomic hydrogen, which emits at a wavelength of 21 centimeters. FARSIDE would be able to observe these radio waves, redshifted by the expansion of the universe to much lower frequencies, and determine whether the gas of the early universe cooled as the standard, adiabatic cosmological model predicts (for an ideal monatomic gas, temperature scales as volume to the −2/3 power), or whether it showed so-called “excess cooling,” which would be a sign of exotic physics. This in turn would allow scientists to make inferences about the nature of dark matter.
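
Two pieces of arithmetic underlie this. The 21-centimeter line, emitted at about 1,420 MHz, arrives at 1,420/(1 + z) MHz, and because volume scales as (1 + z)⁻³, an adiabatically expanding monatomic gas cools as (1 + z)². The sketch below uses a handful of illustrative redshifts, not FARSIDE’s specified observing band, to show the signal landing at tens of megahertz and wavelengths of meters to tens of meters.

```python
HI_REST_FREQ_MHZ = 1420.405751  # rest frequency of the 21 cm hydrogen line
C = 299_792_458                 # speed of light, m/s

def observed_freq_mhz(z):
    """21 cm line shifted by cosmic expansion: f_obs = f_rest / (1 + z)."""
    return HI_REST_FREQ_MHZ / (1 + z)

def adiabatic_gas_cooling(z_start, z_end):
    """Temperature ratio T_end / T_start for an ideal monatomic gas expanding
    adiabatically: T ~ V**(-2/3) and V ~ (1 + z)**-3, so T ~ (1 + z)**2."""
    return ((1 + z_end) / (1 + z_start)) ** 2

for z in (30, 50, 100, 150):  # illustrative redshifts only
    f = observed_freq_mhz(z)
    print(f"z = {z:3d}: {f:6.2f} MHz ({C / (f * 1e6):5.1f} m)")

# Expansion that doubles every length (1 + z halves) cools the gas fourfold
print(adiabatic_gas_cooling(99, 49))  # 0.25
```

Radiation such as the cosmic microwave background, by contrast, cools only as (1 + z), which is why measuring the gas temperature against it is a sensitive test of the standard model.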

Closer to home in both time and space, FARSIDE would study the magnetic fields of rocky planets within 33 light-years of Earth, as well as the space weather in their vicinity. The region dominated by a planet’s magnetic field, called its magnetosphere, is critical for retaining the planet’s atmosphere in the face of stellar wind, the stream of charged particles flowing from its star. Without a magnetosphere, a planet’s atmosphere can be gradually stripped away. FARSIDE’s exoplanet line of research would help us understand this dependence by studying a variety of planets with, presumably, a variety of magnetic field strengths and space weather environments.

Although the FARSIDE final report does not say so, the exoplanet study has implications for the search for extraterrestrial intelligence. It would let us assess whether rocky-planet magnetospheres and atmospheres are common or rare, helping us pin down an estimate of the number of planets per star that could support life. Great Filter theorists would use the information to update their forecasts of success for the human race. We do not observe extraterrestrial civilizations, the theorists say, either because they develop only rarely or because they quickly destroy themselves. If magnetospheres are common, a lack of them can’t explain why we don’t observe other industrial civilizations, suggesting there is another, possibly more ominous, reason.

FARSIDE’s exoplanet research could also allow us to make more direct inferences about the prevalence of industrial civilizations in the cosmos. Suppose the program were to discover that rocky planets with magnetospheres capable of retaining an atmosphere in the face of stellar wind were rare, but that in a handful of star systems, all rocky planets of significant size have nice magnetospheres and atmospheres. These few star systems would instantly become candidates for the existence of terraforming civilizations. After all, when humans start terraforming Mars, one of the first things we will do is create a new magnetosphere and build up the atmosphere. A star system with a statistically improbable number of planets protected from stellar wind would be a sign that another industrial civilization lives there and has already spread to all of the system’s planets.
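
The “statistically improbable” argument can be made concrete with a simple independence model: if each rocky planet has a protective magnetosphere with probability p, a system whose k rocky planets are all protected occurs with probability p^k. Every number below is a hypothetical placeholder, not a FARSIDE estimate, and planets in the same system are unlikely to be fully independent in reality.

```python
def prob_all_protected(p, k):
    """Chance that all k rocky planets in one system are protected, assuming
    each is protected independently with probability p (a simplification)."""
    return p ** k

def prob_at_least_one(n_systems, p, k):
    """Chance that at least one of n surveyed systems is fully protected
    purely by coincidence."""
    return 1 - (1 - prob_all_protected(p, k)) ** n_systems

# Hypothetical inputs, chosen only to illustrate the reasoning
P_PROTECTED = 0.1        # assumed fraction of rocky planets with protective magnetospheres
PLANETS_PER_SYSTEM = 4   # assumed number of sizable rocky planets per system
SYSTEMS_SURVEYED = 1_000 # assumed number of systems surveyed

single = prob_all_protected(P_PROTECTED, PLANETS_PER_SYSTEM)
anywhere = prob_at_least_one(SYSTEMS_SURVEYED, P_PROTECTED, PLANETS_PER_SYSTEM)
print(f"one given system fully protected: {single:.1e}")
print(f"at least one such system by chance among {SYSTEMS_SURVEYED}: {anywhere:.3f}")
```

Under these placeholder numbers, a single fully protected system in a thousand would not be especially surprising (roughly a 1-in-10 chance), but finding several would be hard to attribute to coincidence.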

The proposed cost of FARSIDE, including both the Dark Ages and the exoplanet research, is around $1.3 billion, counting NASA’s customary 30% reserves. The project reaches such a low number by leveraging commercially available space technologies. Launch is projected to cost $150 million, which may actually be high if Starship meets its goals. FARSIDE’s lander is based on Blue Origin’s commercial Blue Moon vehicle. Provided that the program is able to proceed on this commercial, fixed-price basis, it could represent a remarkable advance for fundamental physics, astronomy, and astrobiology. For less than the cost of a single Space Shuttle launch, we can unlock a part of the cosmos upon which no human has ever gazed.

In any case, both FARSIDE and LCRT should be considered first steps. In the long run, we will need bigger, more powerful arrays on the moon, capable of outperforming our existing telescopes at every frequency. Since Jansky’s initial discovery, radio astronomy has been marked by serendipity. Some have already drawn conclusions about our place in the universe from its apparent silence, but the truth is that we have scarcely begun to listen. What wondrous secrets will more powerful instruments serendipitously unlock?

Works in progress
