Introduction

Social media allows individuals to connect directly with people and organizations from around the globe to share ideas and information. This decentralized approach can enhance social welfare by aggregating knowledge from many sources. At the same time, conflicting interests and objectives may give rise to false or misleading information. The prevalence and negative effects of such misinformation have received significant attention in the popular and academic press. According to a recent survey from the Pew Research Center, most Americans view social media as having “a mostly negative effect on the way things are going in the US today,” especially because of misinformation.1https://www.pewresearch.org/fact-tank/2020/10/15/64-of-americans-say-social-media-have-a-mostly-negative-effect-on-theway-things-are-going-in-the-u-s-today/.

Given these concerns, policies aimed at effectively combating misinformation are a topic of popular debate. Can content moderation policies curb misinformation while retaining the broader benefits of social media? What protocols are best suited to detect misinformation, and what consequences should purveyors of misinformation face? To determine whether or not content moderation is effective, we need clean and objective measures of the extent of misinformation as well as how it affects social outcomes. Such measures are not typically available since even reaching a consensus on whether content contains misinformation is contentious. Further, measuring how misinformation affects real-world events is empirically challenging. This is particularly true when assessing the quality of electoral outcomes in the face of the increasing partisan divide.

To address these challenges, we turn to a laboratory experiment. Crucially, our controlled experiment allows us to directly measure outcomes that are unobservable in the real world. We can accurately measure misinformation, objectively determine the quality of voting outcomes, and causally assess the performance of a variety of content moderation policies.

In each period of our experiment, there is an unknown and binary state of the world. Individuals vote for one of the possible states, and each subject receives a fixed payoff if the voting outcome matches the state. In addition, each subject receives a partisan payoff that depends on their type and the voting outcome but is independent of the state. Before voting, each individual decides how much information to purchase about the state.2There is a large literature that studies endogenous information acquisition in voting games. See, for example, Elbittar et al. (2016), Grosser and Seebauer (2016), Großer (2018), and Meyer and Rentschler (2022). In addition, subjects choose which group members to follow on a simulated social media platform, where they can share a (potentially false) post about the information they purchased and see the posts of those they follow.

We consider six content moderation policies on the social media platform. These policies vary the way posts are monitored (a monitoring protocol) and the consequence imposed when misinformation is detected (a consequence). In all content moderation policies, fact-checks perfectly detect misinformation, and the result of each fact-check is automatically added to the corresponding post.

We consider three monitoring protocols. The first is peer-to-peer fact-checking (P2P), in which each subject can pay a small cost to fact-check a given post. The second is platform fact-checking (PL), in which the social media platform fact-checks each post with 20 percent probability. The third combines P2P and PL fact-checking (P2P+PL). The monitoring protocol is varied on a within-subject basis. In the first stage of the experiment, subjects face either P2P, PL, or P2P+PL; in the second, they face another monitoring protocol.3The order of monitoring protocols is balanced across sessions to control for order effects.

In addition, we consider two consequences that are imposed on purveyors of misinformation and vary these on a between-subject basis. In the first, fact-checked posts are flagged and report the results of a fact-check, whether it is true or false (FL). In the second, in addition to flagging, a subject who posted misinformation is subject to persistent scrutiny (PS) for the next two periods, wherein the computer automatically checks their posts (FL+PS).

To evaluate the performance of these content moderation policies, we use data from this experiment as well as data reported in Pascarella et al. (2022).4Pascarella et al. (2022) study how social media affects voting outcomes in an identical experimental environment but without content moderation. The data for Pascarella et al. (2022) and the current design were collected at ExCEN Laboratory at Georgia State University between February and March of 2020. As such, our data are directly comparable, and we use the data of Pascarella et al. (2022) as benchmarks for our analysis. The data for our experiment and Pascarella et al. (2022) were collected in separate sessions; no subject participated in a session for both studies. The recruitment methods, software, and instructions were the same across the studies except for the inclusion of content moderation. The experimenter running the session was also the same. The data from Pascarella et al. (2022) allow for causal comparison to baselines without any social media (NONE), with social media but without the potential for misinformation (TR), and with social media but without any content moderation (MIS). This allows us to assess whether content moderation policies can effectively address misinformation and preserve the benefits of social media.

We first consider how different content moderation policies affect the level of misinformation and then assess the relative performance of different content moderation policies as measured by the quality of voting outcomes. To account for the costs of fact-checking imposed on subjects, as well as the costs of acquiring information, we also consider the welfare of subjects. Finally, we assess the problem from the perspective of a social media platform that is interested in increasing user engagement.

Our results are striking. We find that all content moderation policies studied reduce the level of misinformation. The percentage of posts containing misinformation is lowest when individuals have the opportunity to monitor posts and when the platform monitors posts. Interestingly, adding persistent scrutiny of those caught posting misinformation does not decrease misinformation relative to the case with just flagging. We also find that both group decisions and welfare are improved when peer-to-peer monitoring is available relative to when there is no moderation. Platform fact-checking has more mixed results: it does not improve group decisions relative to the baseline with no moderation and improves welfare only in the case with persistent scrutiny.

Related Literature

This study is most closely related to Pascarella et al. (2022), who consider three environments that serve as baselines for our paper. In the first, there is no social media platform for subjects to share information on. In the second, there is social media but no misinformation. In the third, there is social media, misinformation is possible, and there is no content moderation. The results reported in Pascarella et al. (2022) show that the presence of a social media platform can improve voting outcomes provided that misinformation is not permitted. In their experiment, when misinformation is permitted, 41 percent of all posts contain misinformation, which decreases group welfare.

Relatively few papers have studied the effects of different content moderation policies. The main focus has been on how individuals detect misinformation and how it influences their beliefs and self-reported decisions. Jun et al. (2017) find that individuals are less likely to fact-check statements when they believe that the fact-check may be completed by other individuals. Nieminen and Rapeli (2019) summarize studies on how individuals change their beliefs based on fact-checks as well as how the profession of fact-checking has evolved. Their review finds that misinformation affects partisan issues differently than nonpartisan ones. Barrera et al. (2020) study an election in France and find that fact-checking statements by political candidates can improve the accuracy of voters’ beliefs but does not influence their decision to vote for a candidate. Nyhan et al. (2019) study the 2016 US election and find that journalistic fact-checks can reduce misperceptions but have minimal effects on candidate evaluations or vote choice.

The literature studying voting games in the laboratory finds that the quality of group outcomes improves when there is an option to communicate and information is available at no cost to the participants (Guarnaschelli et al., 2000, Goeree and Yariv, 2011, Le Quement, 2019, Pogorelskiy and Shum, 2019). This result is robust even when partisans have an incentive to misrepresent information (Goeree and Yariv, 2011). Le Quement (2019) also finds that communication leads to improved outcomes when there are diverse preferences, though some subjects do produce misinformation.

In a related working paper, Pogorelskiy and Shum (2019) find that individuals are more likely to share signals that are in line with their partisan bias and to put more stock in private signals that lean in the direction of their partisan bias. Additionally, social media improves group outcomes only if the signals voters receive are unbiased. When voters receive biased signals, social media worsens group decisions relative to if there were no social media.

Experimental Design

In each experimental session, 15 subjects participate in two stages. At the beginning of each stage, the subjects are randomly assigned to groups of five. Each stage consists of a number of rounds, and groups are fixed within a stage. In both stages, there are five rounds with certainty, with a 90 percent continuation probability for each additional round.5The number of rounds is prerandomized for all groups. Groups interact in 17 rounds in the first stage and 18 rounds in the second stage. Subjects are only informed of the continuation probability. One of the treatment variables (monitoring protocols) in our experiment is varied on a within-subject basis, with subjects seeing one treatment in the first stage and another in the second stage.6Note that having two stages in our experiment effectively doubles the number of groups in our data and allows us to balance for any potential ordering effects.
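The termination rule described above implies a geometric distribution over the number of rounds beyond the fifth. The following is a minimal sketch of that calculation, assuming the continuation draw is made independently before each additional round; the function names are illustrative and are not taken from the experimental software.

```python
import random

def expected_rounds(certain_rounds=5, continuation_prob=0.9):
    """Expected stage length: the certain rounds plus a geometric tail."""
    # Each additional round occurs with probability q, so the expected
    # number of additional rounds is q / (1 - q).
    return certain_rounds + continuation_prob / (1.0 - continuation_prob)

def draw_rounds(rng, certain_rounds=5, continuation_prob=0.9):
    """Simulate one realized stage length under the same assumption."""
    rounds = certain_rounds
    while rng.random() < continuation_prob:
        rounds += 1
    return rounds

if __name__ == "__main__":
    print(expected_rounds())
    print(draw_rounds(random.Random(2020)))
```

Under this reading, the expected stage length is about 14 rounds, so the prerandomized lengths of 17 and 18 rounds are somewhat longer than average draws.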

At the start of each stage, each subject within a group is randomly assigned an ID from the set {A,B,C,D,E} and a type. Each subject’s type, 𝑝, is an independent and identically distributed draw from a commonly known, discrete uniform distribution with support {0, 1, … , 99, 100}. Each subject’s type is private information, whereas IDs are common knowledge. Subject IDs and types are fixed within a stage.

In each round, there are two possible states of the world, brown or purple. Each state is equally likely, and the state of the world is determined randomly each round and is unknown to subjects. Groups choose either brown or purple via majority vote, and abstention is not permitted.

Each subject receives a fixed payoff of 50 experimental francs (EF) if the group votes for the true state of the world.7The subjects’ earnings, denominated in EF, are converted back to USD at 145 EF = $1. In addition, each receives a partisan payoff: if the group votes for brown, a subject of type 𝑝 receives a partisan payoff of 𝑝 EF, and if the group votes for purple, the subject’s partisan payoff is (100 − 𝑝) EF. Notice that as 𝑝 increases (decreases), so does a subject’s partisan preference for brown (purple). These partisan preferences are independent of the state of the world and vary from moderate to extreme.8Robbett and Matthews (2018) find that individuals are more likely to give partisan responses in a voting scenario relative to when they are the decision-maker for a policy. They also find that free access to information reduces the partisan gap in outcomes. When information is costly, individuals do not purchase information and vote according to their partisan preferences.

Before voting, each subject can purchase up to nine units of information. If a subject purchases any units of information, they observe a binary signal that matches the state of the world with a probability that increases in the number of units purchased. The marginal cost of a unit of information is increasing (see table 1). Signals are independent, conditional on the state of the world.

Note that for sufficiently extreme types, there is no incentive to purchase any information since a signal, regardless of how accurate, would not alter their vote.9In particular, if a subject’s type is weakly more than 75, they would vote for brown even if they knew the state of the world was purple. Analogously, a subject would vote for purple if their type is weakly less than 25, even if they know the state of the world was brown. To see this, suppose a subject knows that the state of the world is brown. They would still prefer to vote for purple if 50 + 𝑝 < 100 − 𝑝. That is, when 𝑝 < 25. The presence of such extreme partisans is a critical part of our experimental design since we are interested in misinformation. While such types may not purchase information, they do have an incentive to attempt to influence the votes of others.10Such extreme partisans are exactly the types who are likely to post misinformation or strategically withhold information that runs counter to their partisan preference. Further, these types are particularly likely to target posts for fact-checks that run counter to their partisan preferences.
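The thresholds for extreme partisans can be checked by enumerating all types. The sketch below assumes only the payoff structure stated above (a 50 EF payoff for a correct group vote, plus 𝑝 EF for brown or 100 − 𝑝 EF for purple); the function names are illustrative.

```python
FIXED_PAYOFF = 50  # EF received when the group vote matches the state

def gross_payoff(p, group_vote, state):
    """Payoff before any expenditures for a subject of type p."""
    accuracy = FIXED_PAYOFF if group_vote == state else 0
    partisan = p if group_vote == "brown" else 100 - p
    return accuracy + partisan

def prefers_wrong_outcome(p, state):
    """True if type p strictly prefers the outcome that mismatches the state."""
    wrong = "purple" if state == "brown" else "brown"
    return gross_payoff(p, wrong, state) > gross_payoff(p, state, state)

# Types that strictly prefer purple even when the state is known to be brown.
always_purple = [p for p in range(101) if prefers_wrong_outcome(p, "brown")]
# Types that strictly prefer brown even when the state is known to be purple.
always_brown = [p for p in range(101) if prefers_wrong_outcome(p, "purple")]

if __name__ == "__main__":
    print(min(always_purple), max(always_purple))
    print(min(always_brown), max(always_brown))
```

The strict preference holds for types below 25 and above 75; a subject whose type is exactly 25 or 75 is indifferent between the two outcomes when the state is known.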

After making their purchase decisions, subjects can communicate with other group members via a social media platform. The social media platform is a directed network like Twitter or Instagram. Each subject decides which group members they want to “follow,” and subjects can revise who they follow in each period, with the default being their choice from the preceding period.11In the first period, subjects follow all members of the group by default and can modify this structure before proceeding with the period. A subject will observe posts made by group members they follow. After learning which group members are following each other, each group member decides whether or not to make a post. A post contains up to three components:

- Number of units of information purchased: 0–9

- Signal observed: brown, purple, or none

- Subject type (optional): 0–100

The number of units of information purchased, the signal observed, and the type may each or all be falsely reported by a subject. The subject’s type could also be omitted entirely.

All posts are subject to content moderation. We have six treatments, corresponding to six content moderation policies. Each policy is composed of a monitoring protocol, which varies how posts are monitored, and a consequence that is imposed when misinformation is detected. Subjects face one monitoring protocol in the first stage and another in the second. The consequence imposed on purveyors of misinformation is constant across stages and varied across sessions. The three monitoring protocols we consider are the following:

- P2P: Each subject has the option to fact-check any post shared by group members they follow. Checking a post costs 5 EF.

- PL: The computer fact-checks each post with a 20 percent probability.12There is no cost to group members if the “platform” conducts a fact-check so that the cost of fact-checks is not equal across monitoring protocols. This is an asset to our design since when real-world social media platforms fact-check user content, no costs are imposed on users of the platform, and we are interested in assessing content moderation policies as they would actually be implemented.

- P2P+PL: The computer fact-checks each post with a 20 percent probability. Subjects can also fact-check any post for a cost of 5 EF, before observing which posts have been checked by the platform.

For all three monitoring protocols, each fact-checked post is automatically flagged as accurate only if the subject correctly states both the units of information purchased and the signal’s color. If either of these is reported inaccurately, the post is automatically flagged as inaccurate. The type revealed in the post is not fact-checked, and the results of fact-checks do not report which component of the post was inaccurate. The results from all fact-checks are shared publicly with everyone who can see the post on the platform.
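The flagging rule and the platform's random selection of posts can be sketched as follows. This is an illustration under the rules stated above, not the z-Tree implementation; the `Post` class and the function names are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class Post:
    units: int                           # units of information reported (0-9)
    signal: str                          # "brown", "purple", or "none"
    type_reported: Optional[int] = None  # optional and never fact-checked

def flag(post, true_units, true_signal):
    """Flag a checked post; the flag does not say which component was wrong."""
    accurate = post.units == true_units and post.signal == true_signal
    return "accurate" if accurate else "inaccurate"

def platform_selects(rng, prob=0.20):
    """Under PL, each post is independently selected with 20 percent probability."""
    return rng.random() < prob

if __name__ == "__main__":
    rng = random.Random(1)
    post = Post(units=4, signal="brown", type_reported=90)
    if platform_selects(rng):
        print(flag(post, true_units=3, true_signal="brown"))
```

Because the reported type is ignored by `flag`, a subject can misstate their type without any risk of being flagged, as in the design.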

The two consequences we consider are the following:

- FL: The result of each fact-check is flagged as described above, and no further actions are taken.

- FL+PS: The result of each fact-check is flagged as described above. Additionally, if a subject’s post is flagged as inaccurate, the computer will automatically fact-check any post shared by that subject during the next two rounds.
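Persistent scrutiny amounts to a per-subject counter of rounds in which fact-checks are automatic. The sketch below is one way to track it. It assumes that a subject flagged again while under scrutiny has the two-round window restart, which the description above does not specify; the class name is hypothetical.

```python
class ScrutinyTracker:
    """Tracks which subjects have their posts checked automatically."""

    def __init__(self, scrutiny_rounds=2):
        self.scrutiny_rounds = scrutiny_rounds
        self.remaining = {}  # subject ID -> rounds of scrutiny left

    def must_check(self, subject_id):
        """True if any post by this subject is checked in the current round."""
        return self.remaining.get(subject_id, 0) > 0

    def end_round(self, flagged_inaccurate_ids):
        """Advance one round, then start scrutiny for newly flagged subjects."""
        for subject_id in list(self.remaining):
            self.remaining[subject_id] -= 1
            if self.remaining[subject_id] <= 0:
                del self.remaining[subject_id]
        # Assumption: a new inaccurate flag restarts the full window.
        for subject_id in flagged_inaccurate_ids:
            self.remaining[subject_id] = self.scrutiny_rounds

if __name__ == "__main__":
    tracker = ScrutinyTracker()
    tracker.end_round({"A"})        # A is flagged as inaccurate in round t
    print(tracker.must_check("A"))  # scrutiny applies in round t + 1
```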

After subjects observe the results from any fact-checks that occurred, they each vote for either brown or purple. Each subject then observes the group’s decision, the randomly assigned state of the world, and their own private payoff. Each player’s payoff for the round is composed of the partisan and nonpartisan payoffs described above, less any expenditure on units of information and P2P fact-checks.
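A subject's payoff for a round can be written out as a short calculation. In this sketch the information expenditure is passed in as a number because the cost schedule in table 1 is not reproduced here; the function names are illustrative.

```python
EF_PER_USD = 145    # conversion rate stated in the design
PEER_CHECK_COST = 5 # EF per peer-to-peer fact-check

def net_round_payoff(p, group_vote, state, info_cost=0, n_peer_checks=0):
    """Round payoff in EF for a subject of type p, net of expenditures."""
    accuracy = 50 if group_vote == state else 0
    partisan = p if group_vote == "brown" else 100 - p
    return accuracy + partisan - info_cost - PEER_CHECK_COST * n_peer_checks

def ef_to_usd(ef):
    """Convert experimental francs to US dollars."""
    return ef / EF_PER_USD

if __name__ == "__main__":
    # Type 60, group votes brown and is correct, 10 EF of information
    # (a hypothetical figure), two peer fact-checks.
    print(net_round_payoff(60, "brown", "brown", info_cost=10, n_peer_checks=2))
```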

Note that all of our treatments involve a content moderation policy. To assess the performance of a given content moderation policy, we compare our data against baseline environments from Pascarella et al. (2022). In Pascarella et al. (2022), subjects participated in three variations of the experimental environment described above but without ex-post content moderation. In particular, subjects in the study participated in treatments that either did not include a social media platform (NONE), included a platform that only allowed truthful posts (TR), or included a platform that allowed for both truthful and untruthful posts (MIS). These three treatments serve as benchmarks for the content moderation policies considered in this study, as the data from Pascarella et al. (2022) are directly comparable to the data from this study.13See footnote 4 for more details.

We ran a total of 12 sessions, with 6 sessions for each consequence. The 6 sessions that correspond to a consequence cover all the possible combinations and orders of monitoring protocols to control for order effects. The experiment was conducted at the ExCEN laboratory at Georgia State University in early March of 2020. A total of 180 subjects participated in the experiment across 12 sessions of 15 subjects each. The subjects were recruited using an automated system that randomly invited participants via e-mails from a pool of more than 2,400 students who signed up for participation in economic experiments. Each session was computerized using z-Tree (Fischbacher, 2007). Subjects were not allowed to communicate with each other except through the experiment’s social media platform.14To ensure privacy, each computer terminal in the lab was enclosed with dividers.

At the start of each stage, subjects were provided with a physical copy of the instructions, which were then read aloud by the experimenter.15See Appendix A for a sample set of instructions. After the instructions were read, each subject individually answered a series of quiz questions to ensure comprehension. Each session lasted for approximately 2 hours and 15 minutes. The average payoff was $19.50, with a range between $9.75 and $26.40.

Results

(Mis)information

We first report how the different content moderation policies in our experiment affect the amount of misinformation shared by subjects. Figure 1 reports the mean number of posts containing misinformation across treatments. Panel (a) reports the three treatments where the consequence is flagging, while panel (b) reports the three treatments where the consequence is flagging and persistent scrutiny. Both panels contain the baseline treatment in which social media does not have any content moderation policy (MIS).

Figure 1.

Of note, all content moderation policies successfully reduce the number of posts containing misinformation.16Each of the corresponding 𝑡-tests is highly significant, with 𝑝 < 0.001. In our analysis, we focus on group decisions since the interest of our study is to learn how content moderation policies affect aggregate outcomes. Our unit of observation is a group in a given round, and we report the results of two-tailed 𝑡-tests. While this is important, content moderation may be accompanied by a reduction in the number of posts themselves, so it is possible that a content moderation policy reduces the number of posts containing misinformation, while the percentage of posts with misinformation actually increases. To investigate this possibility, table 2 reports summary statistics on the number of posts in a group, the share of posts containing misinformation, and the percentage of posts that were fact-checked by each available monitoring protocol.

Column 1 shows that the consequence faced for posting misinformation does affect the number of posts: persistent scrutiny reduces the number of posts relative to the case of flagging alone ().17For this test, we pooled the data across monitoring protocols. The results are analogous when considering all pairwise comparisons. However, the monitoring protocol does not affect the number of posts, holding the consequence fixed (Kruskal–Wallis, 𝑛.𝑠., in both cases).18When a test is not statistically significant at conventional levels, we denote this as 𝑛.𝑠. We next analyze the prevalence of misinformation as a percentage of total posts since the number of posts differs across consequences.19In Appendix B, we report summary statistics where the variable of interest is the percentage of the units of information purchased, including those shared inaccurately (column 3). The results presented here extend to these data as well. As seen in column 2, all content moderation policies reduce the proportion of posts containing misinformation relative to the full information baseline ( for all comparisons).

Comparing the monitoring protocols, the percentage of posts containing misinformation is only marginally less in P2P than in PL when pooling across consequences (). However, the combination of these protocols (P2P+PL) outperforms both P2P () and PL (). Thus, establishing multiple channels for fact-checking is likely to be most successful in reducing the percentage of posts containing misinformation. Furthermore, adding persistent scrutiny does not affect the percentage of posts containing misinformation when pooling across monitoring protocols.20Adding persistent scrutiny has no statistically significant effect within the PL or the P2P + PL monitoring protocols. Adding persistent scrutiny actually increases the percentage of posts containing misinformation when the detection method is P2P (𝑝 < 0.001). It is unclear what drives this increase. Even with this puzzling result, we can say that adding persistent scrutiny of purveyors of misinformation does not reduce the percentage of posts containing misinformation in our experiment. That is, a harsher penalty does not seem to yield any additional deterrence.

Quality of Group Decision-Making

We next report how content moderation policies affect the quality of group decision-making. We define quality of group decision-making as the frequency with which a group’s vote matches the state of the world. Table 3 reports summary statistics of this measure. Notice that the P2P monitoring protocol improves the quality of decisions relative to the absence of content moderation (the MIS baseline). This is true for both consequences ( in both cases). In fact, the quality of group decisions in the P2P monitoring protocol is not significantly different than the case in which misinformation is not possible (the TR baseline). That is, P2P is an effective monitoring protocol. However, the same cannot be said for the PL monitoring protocol. In particular, the quality of group decisions is not significantly different than in the MIS baseline and is lower than the TR baseline.21These results are all true under both consequences. The corresponding t-tests comparing the quality of group decision-making across PL and TR have 𝑝 < 0.05. In addition, the quality of group decision-making under PL is not statistically different from the scenario without social media (the NONE baseline) under either consequence.
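The quality measure defined above is a simple frequency over group-round observations. The sketch below assumes each observation is a pair of the group's majority vote and the true state; the function names and the sample data are illustrative and are not drawn from the experiment.

```python
def majority_vote(votes):
    """Group decision by simple majority; with five voters and no
    abstention there are no ties."""
    brown = sum(1 for v in votes if v == "brown")
    return "brown" if brown > len(votes) - brown else "purple"

def decision_quality(observations):
    """Share of group-round observations in which the vote matches the state."""
    obs = list(observations)
    if not obs:
        raise ValueError("at least one observation is required")
    return sum(1 for vote, state in obs if vote == state) / len(obs)

if __name__ == "__main__":
    # Hypothetical data: four group-rounds, three decided correctly.
    sample = [("brown", "brown"), ("purple", "brown"),
              ("purple", "purple"), ("brown", "brown")]
    print(decision_quality(sample))
```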

The P2P+PL monitoring protocol follows the same pattern as P2P. The quality of decisions is improved relative to MIS ( in both cases) and is not statistically different from TR. That is, adding platform monitoring to the P2P monitoring protocol does not result in any gains. Similar to the results on the prevalence of misinformation, the addition of persistent scrutiny provides no benefit in terms of group decisions (𝑛.𝑠 for all comparisons).

Importantly, the results reported in this section are not driven only by the proportion of posts that are fact-checked at the group level. To see this, note that the rates of fact-checking reported in column 3 of table 2 are similar across the different content moderation policies.22To explore other factors that may influence group decision quality, we analyze panel linear probability models in Appendix C.

Welfare

P2P monitoring improves the quality of group decision-making. However, this improvement comes at a cost as subjects bear the expense of monitoring. Are the costs associated with improving group decisions offset by the increased earnings from improved group decision-making? To assess this, we now analyze group earnings (welfare).23We define welfare as the sum of the net payoff of all members in the group.

Table 4 decomposes average group payoffs into the payoffs associated with the voting outcome, information expenditures, and fact-checking expenditures. Column 4 shows that the costs associated with P2P monitoring are not sufficient to erase the gains. That is, in both P2P and P2P+PL, welfare is higher than the MIS baseline ( for both consequences) and is not significantly different than the TR baseline (again, for both consequences).
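The decomposition of group payoffs can be expressed as sums over group members. The sketch below assumes each member's round is summarized by a voting payoff, an information expenditure, and a fact-checking expenditure, all in EF; the dictionary keys and the sample figures are hypothetical.

```python
def group_welfare(members):
    """Aggregate a group's payoff components and net welfare (in EF)."""
    voting = sum(m["voting_payoff"] for m in members)
    info = sum(m["info_cost"] for m in members)
    checks = sum(m["fact_check_cost"] for m in members)
    return {
        "voting_payoff": voting,
        "info_cost": info,
        "fact_check_cost": checks,
        "welfare": voting - info - checks,  # sum of members' net payoffs
    }

if __name__ == "__main__":
    # Hypothetical two-member illustration; actual groups have five members.
    members = [
        {"voting_payoff": 110, "info_cost": 10, "fact_check_cost": 5},
        {"voting_payoff": 90, "info_cost": 0, "fact_check_cost": 0},
    ]
    print(group_welfare(members))
```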

Turning to PL monitoring, we find that when there is no persistent scrutiny, the costless fact-checks provided by the platform are insufficient to improve welfare relative to the MIS baseline.24Not surprisingly, when misinformation is only flagged, PL monitoring results in lower welfare than in the TR baseline (𝑝 < 0.05). However, adding persistent scrutiny tips the scales; in this case PL improves welfare relative to the MIS baseline (). This is not surprising since persistent scrutiny results in additional no-cost fact-checking by the computer. However, it is important to note when comparing consequences, the addition of persistent scrutiny does not improve welfare relative to the case of only flagging. This is true for all monitoring protocols (𝑛.𝑠. in all cases).25Regression analysis yields similar results. See Appendix C for details.

Social Connectedness

Pascarella et al. (2022) show that in the absence of content moderation policies, misinformation degrades group decision-making, in part due to social connections decaying over time. Despite this, they find that activity on the social network, as measured by the number of posts, is not reduced by misinformation. Thus, a key question for this study is whether content moderation policies can improve the number of connections relative to the MIS baseline.

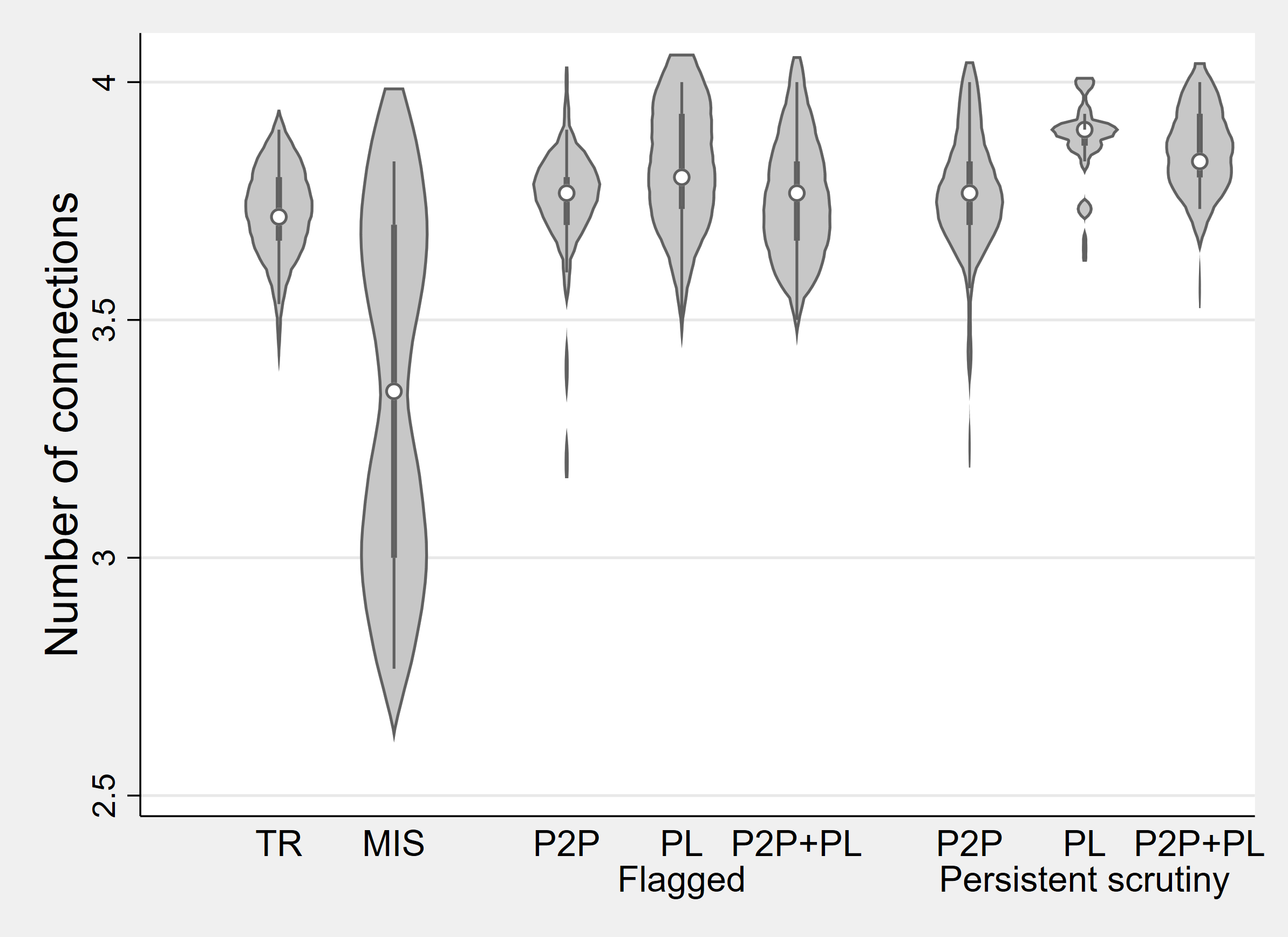

Panel (a) of figure 2 shows violin plots of the distributions of the number of connections in groups in the TR and MIS baselines as well as in the six content moderation policies we study. All six content moderation policies result in more connections than in the MIS baseline ( in all cases); that is, the introduction of content moderation increases engagement on the social media platform. Indeed, some content moderation policies increase the number of connections relative to the TR baseline.26For the PL monitoring protocols, this is significant for both consequences (𝑝 < 0.001, in both cases). For both P2P and P2P+PL with persistent scrutiny, this difference is significant (𝑝 < 0.001, in both cases). However, for both P2P and P2P+PL with only flagging, there is no significant difference. Panel (b) shows the violin plots for the number of posts. Note that there are no economically significant differences in the number of posts made within a group.27While some of the pairwise comparisons are statistically significant, the magnitudes of the differences are economically trivial.

Figure 2. Violin Plots of the Number of Connections and Posts

(a) Connections

(b) Posts

Discussion

Pascarella et al. (2022) find that introducing a social media platform can improve group outcomes, provided that misinformation is not permitted. When misinformation is permissible, all the gains from introducing a platform are erased, and group outcomes are worse than the scenario in which there is no social media platform at all.

We study whether content moderation policies can preserve the benefits of social media even when they may not be able to perfectly screen out all misinformation. In our experiments, a content moderation policy consists of a method of monitoring social media posts as well as a consequence that is imposed on identified purveyors of misinformation. We consider monitoring protocols in which social media users can pay a cost to initiate a fact-check, the platform fact-checks each post with a known probability, and a combination of these two. We consider two consequences for posting misinformation: flagging a post’s accuracy whenever a fact-check is performed and (in addition) persistently scrutinizing identified purveyors of misinformation.

All the content moderation policies we study reduce the level of misinformation on the social media platform relative to the case with no moderation. Further, they each reduce the share of posts that contain misinformation. The combined monitoring by peers and the platform performs the best, reducing the prevalence of misinformation by 22 percentage points (a 54 percent reduction relative to baseline). Notably, the addition of persistent scrutiny as a consequence of posting misinformation has no effect on the share of posts containing misinformation. This suggests, at a minimum, some diminishing returns to the punishments imposed on purveyors of misinformation. However, all policies considered do reach the primary goal of reducing the presence of misinformation on the platform.
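The two figures quoted for the combined protocol can be reconciled with the 41 percent baseline share reported by Pascarella et al. (2022). The following arithmetic check uses only those rounded figures, so the implied remaining share is approximate.

```python
baseline_share = 41  # percent of posts with misinformation in the MIS baseline
reduction_pp = 22    # reduction in percentage points under P2P+PL

# Share of posts with misinformation implied by the rounded figures.
remaining_share = baseline_share - reduction_pp

# Reduction expressed relative to the baseline share.
relative_reduction = reduction_pp / baseline_share

if __name__ == "__main__":
    print(remaining_share)
    print(round(100 * relative_reduction))
```

The ratio 22/41 is roughly 0.54, which matches the 54 percent relative reduction stated above.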

Importantly, our design also allows us to speak to how content moderation policies affect group decisions and welfare, which would be unobservable outside of an experimental setting. We find that both group decisions and welfare are improved when peer-to-peer monitoring is available relative to when there is no moderation.28In fact, when peer monitoring is allowed, group decisions and welfare are not statistically different from the baseline with costless, perfect content moderation (the TR treatment).

That is, peer-to-peer monitoring, though costly for subjects to use, improves both decisions and welfare. Platform fact-checking has more mixed results. When used alone, it does not improve group decisions relative to the baseline with no moderation. It can improve welfare but only in the case with persistent scrutiny, where the computer is fact-checking close to 30 percent of posts.

Our research provides the first rigorous evaluation of content moderation policies and offers valuable insights for the fight against misinformation. Our results also highlight the value of laboratory studies: policy proposals can be tested in a controlled setting before being rolled out in the real world. This experiment can serve as a foundation for future studies on (mis)information-sharing and voting outcomes, and we believe there is much to be done in this promising area.

One important avenue for future research is to investigate whether our results are robust to changing the parameters implemented in our experiment. That is, it is important to conduct additional experiments that test the edge of the validity of our results, in the spirit of Smith (1982). In particular, a promising avenue would be to study content moderation policies with a higher cost of initiating a peer-to-peer fact-check as well as policies with more efficient platform fact-checks. Other mechanisms to curb misinformation could also be explored.

Appendix A: Sample Instructions

Appendix B: Composition of Information Shared

Appendix C: Regression

Quality of Group Decisions

We analyze factors that influence group decision quality in a panel linear probability regression with standard errors clustered at the group level.[29] Table 6 presents the results for the five models we estimate. The dependent variable is whether the group’s vote matched the correct policy. In columns 1 and 2, we include data from the fact-checking and the information-sharing protocols. The independent variables include a dummy for each protocol; the excluded category is peer-to-peer fact-checking when posts are flagged. We also control for the order in which the protocol was introduced and for learning across periods.[30] In column 2, we include a variable to account for the total information purchased by groups.

[29] Logistic and probit regression models yield comparable results.

[30] The variable used to pick up order effects is a dummy variable for whether or not the protocol was the first protocol a participant encountered. Learning across periods is controlled for via log(t − 1).

In columns 3 and 4, we include data from all protocols where a social media platform was present to separate the platform’s effect on group decision quality. In column 3, in addition to the total information purchased, we control for the average number of connections on the platform. In column 4, we include two variables that account for the nature of information shared on the platform. The first measures the units of information that are wasted because the corresponding post contains misinformation. The second accounts for the units of information strategically withheld. In column 5, we include only data from the fact-checking protocols and add a variable for the total number of fact-checked posts.
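The estimation approach described above (a linear probability model with standard errors clustered at the group level) can be illustrated with a minimal sketch. This is not the estimation code behind Table 6: it uses a single hypothetical treatment dummy instead of the full set of protocol dummies and controls, made-up data, and the basic cluster-robust (CR0) sandwich estimator with no small-sample correction. The function and variable names are our own.

```python
from collections import defaultdict
from math import sqrt


def lpm_cluster_se(y, d, groups):
    """OLS of a 0/1 outcome y on an intercept and one 0/1 dummy d.

    Returns (beta, se), where se holds cluster-robust (CR0) standard errors,
    clustering on the ids in `groups`. No small-sample correction is applied.
    """
    n = float(len(y))
    sum_d = float(sum(d))            # for a 0/1 dummy, sum(d*d) == sum(d)
    det = n * sum_d - sum_d * sum_d  # determinant of X'X, with X = [1, d]
    # (X'X)^{-1} for the 2x2 case ("bread" of the sandwich)
    bread = [[sum_d / det, -sum_d / det],
             [-sum_d / det, n / det]]
    xty = [float(sum(y)), float(sum(di * yi for di, yi in zip(d, y)))]
    beta = [bread[r][0] * xty[0] + bread[r][1] * xty[1] for r in range(2)]

    # Sum the scores x_i * e_i within each cluster.
    scores = defaultdict(lambda: [0.0, 0.0])
    for yi, di, g in zip(y, d, groups):
        resid = yi - beta[0] - beta[1] * di
        scores[g][0] += resid
        scores[g][1] += di * resid

    # "Meat" of the sandwich: sum over clusters of s_g s_g'.
    meat = [[sum(s[a] * s[b] for s in scores.values()) for b in range(2)]
            for a in range(2)]

    # Sandwich variance: bread * meat * bread.
    bm = [[sum(bread[a][k] * meat[k][b] for k in range(2)) for b in range(2)]
          for a in range(2)]
    var = [[sum(bm[a][k] * bread[k][b] for k in range(2)) for b in range(2)]
           for a in range(2)]
    se = [sqrt(var[0][0]), sqrt(var[1][1])]
    return beta, se


# Made-up data: 4 groups observed for 2 periods each;
# groups 1 and 2 face the hypothetical treatment.
correct = [1, 1, 0, 1, 0, 0, 1, 0]   # 1 if the group's vote matched the correct policy
treated = [1, 1, 1, 1, 0, 0, 0, 0]
group_id = [1, 1, 2, 2, 3, 3, 4, 4]
beta, se = lpm_cluster_se(correct, treated, group_id)
print(beta, se)
```

In this toy example the slope equals the difference in the share of correct group decisions between treated and untreated observations (0.75 versus 0.25). Clustering matters because repeated decisions by the same group are unlikely to be independent, so residuals are summed within a group before they enter the variance estimate.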

The overall quality of group decisions is not significantly different across the three fact-checking protocols under either consequence, but it is significantly lower when there is no option to fact-check and participants can share posts containing misinformation. Once we account for the total information purchased, we find that platform-only fact-checking with flagged posts leads to lower decision quality among the fact-checking protocols. Recall that, apart from platform fact-checking under persistent scrutiny, information purchased increases in the presence of a social media platform. This increase in information purchased does not translate into higher-quality group decisions when only the platform is fact-checking or in the MIS baseline, where there is no option to fact-check.

In columns 3 and 4, we consider only the protocols where a social media platform is present. Recall that the fact-checking protocols lead to an increase in the average number of connections relative to the TR and MIS baselines. This increase in the average number of connections improves the quality of group decisions. Not surprisingly, we find that an increase in the information wasted due to misinformation lowers group decision quality. In column 5, we consider only the three fact-checking protocols and find that platform fact-checking when posts are flagged lowers the quality of decisions. Although platform fact-checking under persistent scrutiny also leads to a lower quality of group decisions, this difference is not significant once we account for the lower information purchase.

Welfare

We also analyze welfare in a linear panel regression with standard errors clustered at the group level. Table 7 presents the results for the models we estimate. The dependent variable of interest is the total group payoff. In columns 1 and 2, we include data from both the fact-checking and information-sharing protocols. The independent variables include a dummy for each protocol; the excluded category is peer-to-peer fact-checking when posts are flagged. We also control for the order in which the protocol was introduced and for learning across periods. In column 2, we include a variable to account for the total information purchased by groups.
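One way to write the column 1 specification is sketched below. The notation is ours and purely illustrative: $\pi_{gt}$ is the total payoff of group $g$ in period $t$, $D^{k}_{gt}$ is a dummy for protocol $k$, $k_0$ is the excluded category (peer-to-peer fact-checking when posts are flagged), and $\mathrm{First}_{gt}$ is the order dummy. The learning term follows the form used in the decision-quality regressions.

```latex
\[
\pi_{gt} = \alpha + \sum_{k \neq k_0} \beta_k \, D^{k}_{gt}
         + \gamma \, \mathrm{First}_{gt} + \delta \, \log(t-1) + \varepsilon_{gt}
\]
```

Under this reading, each $\beta_k$ measures the difference in total group payoff between protocol $k$ and the excluded category, and column 2 adds the total information purchased as a further regressor.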

In columns 3 and 4, we include data from all protocols where a social media platform was present to separate the platform’s effect on welfare. In column 3, in addition to the total information purchased, we control for the average number of connections on the platform. In column 4, we include two variables that account for the nature of information shared on the platform. The first measures the units of information that are wasted because the corresponding post contains misinformation. The second accounts for the units of information strategically withheld. In column 5, we include only data from the fact-checking protocols and add a variable for the total number of fact-checked posts.

The fact-checking protocols under the two consequences lead to higher total welfare relative to the MIS baseline, where sharing misinformation is possible but there is no option to fact-check. These results are robust even after we account for the increase in the total information purchased in the presence of a social media platform. Not surprisingly, when we analyze the data in the presence of a social media platform, we find that having more connections on the platform increases total welfare, whereas units of purchased information wasted due to misinformation lower total welfare. Comparing the two consequences of fact-checking, we do not find any significant difference in welfare.

References

Barrera, O., Guriev, S., Henry, E., and Zhuravskaya, E. (2020). Facts, alternative facts, and fact checking in times of post-truth politics. Journal of Public Economics, 182:104123.

Elbittar, A., Gomberg, A., Martinelli, C., and Palfrey, T. R. (2016). Ignorance and bias in collective decisions. Journal of Economic Behavior & Organization.

Fischbacher, U. (2007). z-Tree: Zurich toolbox for ready-made economic experiments. Experimental Economics, 10(2):171–178.

Goeree, J. K. and Yariv, L. (2011). An experimental study of collective deliberation. Econometrica, 79(3):893–921.

Großer, J. (2018). Voting game experiments with incomplete information: A survey. Available at SSRN 3279218.

Großer, J. and Seebauer, M. (2016). The curse of uninformed voting: An experimental study. Games and Economic Behavior, 97:205–226.

Guarnaschelli, S., McKelvey, R. D., and Palfrey, T. R. (2000). An experimental study of jury decision rules. American Political Science Review, 94(2):407–423.

Jun, Y., Meng, R., and Johar, G. V. (2017). Perceived social presence reduces fact-checking. Proceedings of the National Academy of Sciences, 114(23):5976–5981.

Le Quement, M. T. and Marcin, I. (2019). Communication and voting in heterogeneous committees: An experimental study. Journal of Economic Behavior & Organization.

Meyer, J. and Rentschler, L. (2022). Abstention and informedness in nonpartisan elections. Working paper.

Nieminen, S. and Rapeli, L. (2019). Fighting misperceptions and doubting journalists’ objectivity: A review of fact-checking literature. Political Studies Review, 17(3):296–309.

Nyhan, B., Porter, E., Reifler, J., and Wood, T. J. (2019). Taking fact-checks literally but not seriously? The effects of journalistic fact-checking on factual beliefs and candidate favorability. Political Behavior, pages 1–22.

Pascarella, J., Mukherjee, P., Rentschler, L., and Simmons, R. (2022). Social media, (mis)information, and voting decisions. Working paper.

Pogorelskiy, K. and Shum, M. (2019). News we like to share: How news sharing on social networks influences voting outcomes. Available at SSRN 2972231.

Robbett, A. and Matthews, P. H. (2018). Partisan bias and expressive voting. Journal of Public Economics, 157:107–120.