1 Introduction: A Primer on Artificial Intelligence

Artificial intelligence (AI) has been a topic of increasing public discussion and debate over the last decade. Although AI is commonly referred to as a technology, the term is a catch-all for the set of technologies and designs that make AI possible.1Matthew Mittelsteadt, AI Policy Guide, Mercatus Center at George Mason University, April 2023, accessed July 27, 2023, https://www.mercatus.org/ai-policy-guide. In the broadest sense, AI applies to any technique that enables computer software to mimic human intelligence.2Matt Vella, “How AI is Transforming Our World,” in TIME, Artificial Intelligence: The Future of Humankind (Time Inc. Books: New York, 2017), 4–7. Such AI employs logic, if-then rules, decision trees, and machine learning; the latter includes statistical techniques usually applied to large data sets.3Vella, “How AI is Transforming Our World.” These data sets enable the computer software to improve at tasks through the application of learning algorithms made up of artificial neural networks and large-scale, probabilistic reasoning systems, resulting in the AI software engaging in experiential “deep learning.”4Vella, “How AI is Transforming Our World.”

There are three generally accepted definitions of the scope of AI:5Timothy E. Carone, Future Automation: Changes to Lives and to Businesses (World Scientific Publishing Co.: Singapore, 2018); Vella, “How AI is Transforming Our World,” 910.

- Artificial Narrow Intelligence (ANI): ANI (also referred to as “weak” AI) is often a part of the computational statistics area of study. (Although in its most brittle symbolic forms, it is largely divorced from statistics.) A firm utilizing ANI is using a specific AI to support a specific process with well-defined rules. In human terms, these systems have no intelligence or common sense. The ANI system is software based on computational statistics used to create models that can help human beings or other machines make decisions at ever-increasing speeds. This is an AI level that presently exists in commercial applications—including GPT-4. While these technologies are an advancement in ANI, they still lack the cognitive abilities and other “human-like” attributes required to be classified as “artificial general intelligence.”

- Artificial General Intelligence (AGI): AGI (“strong” AI) is a term coined by physicist Mark Gubrud in 1997.6Avi Gopani, “The Never Ending Debate on AGI,” Analytics India Magazine, May 27, 2022, accessed July 27, 2023, https://analyticsindiamag.com/the-never-ending-debate-on-agi/. It describes software that has cognitive abilities similar to humans. It has a “sense” of consciousness and is typically equated to human-like ability in terms of learning, thinking, and processing information for decision-making. An AGI equates to encapsulating a life of human experiences in a specific domain of knowledge and translating it into terabytes of training data.

An AGI so defined would, until recently, have been expected to pass the Turing test, which qualifies an AI to be labeled as intelligent in that it operates so proficiently that human observers could not distinguish its behavior from a human’s behavior. However, since finding that the Turing test essentially measures only “deception,” large language model developers now utilize the General Language Understanding Evaluation (GLUE) or the Stanford Question Answering Dataset (SQuAD) as benchmarks to measure AGI.7Will Oremus, “Google’s AI Passed a Famous Test — and Showed How the Test Is Broken,” The Washington Post, June 17, 2022, accessed July 27, 2023, https://www.washingtonpost.com/technology/2022/06/17/google-ai-lamda-turing-test/. This is an AI level not yet technologically feasible, but it is anticipated to be so in the future.8Henry A. Kissinger, Eric Schmidt, and Daniel Huttenlocher, The Age of AI and Our Human Future (Hachette Book Group: New York, 2021), 56.

- Artificial Super Intelligence (ASI): ASI (often referred to as “existential” in nature because of potentially cataclysmic risks to the future existence of humanity) is human-level intelligence executed a thousand times faster and viewed as decades away from arriving, if it arrives at all.

AI software is characterized by some economists as a “general purpose technology,”9Nicholas Crafts, “Artificial Intelligence as a General-Purpose Technology: An Historical Perspective,” Oxford Review of Economic Policy 37, no. 3 (September 1, 2021): 521–536, https://doi.org/10.1093/oxrep/grab012; Juan Mateos-Garcia, “The Economics of AI Today,” The Gradient, January 18, 2020, accessed July 27, 2023, https://thegradient.pub/the-economics-of-ai-today/. with ANI today embedded in a range of industries, firms, and products and services across the United States.10Erik Brynjolfsson and Andrew McAfee, “The Business of Artificial Intelligence,” Harvard Business Review, July 18, 2017, accessed May 15, 2023, https://hbr.org/2017/07/the-business-of-artificial-intelligence. For example, the chemical basis of photography has been replaced with digital photography.11Marco Iansiti and Karim R. Lakhani, Competing in the Age of AI: Strategy and Leadership When Algorithms and Networks Run the World, Harvard Business Review Magazine, January–February 2020, 5–8. Instead of Kodak (a traditionally chemistry-based firm) remaining a dominant player in the photography industry, today this industry is dominated by new social platform entrants, including Google and Facebook. Google and Facebook technologies utilize photo apps (and ANI) to recommend products, services, and news feeds that users might like, as well as friend recommendations that introduce a person to someone based on shared affinities or backgrounds.

Kodak’s demise was caused not by a digital photography start-up venture but by the emergence of smartphones and social network firms and the underlying developments in ANI. Likewise, in the personal digital assistant (PDA) and smart appliance market space, many companies have been actively developing AI assistants. One of the most widely known is the Siri voice recognition system, introduced in October 2011 by Apple in its iPhone 4S. Over the last decade, Siri has been integrated into a variety of other Apple products, from the iPad to Apple TV to AirPods.12Artificial Intelligence: Its Perils and Its Promise (New York Times Magazine: New York NY, 2020), 8. As these PDAs (also offered by Amazon, Google, and Microsoft, to name a few major manufacturers) have expanded product capabilities, interest has risen in how these ANI-based technologies are most efficaciously used.

ANI functionality has, by and large, been a natural progression of technologies. It started with companies using basic speech recognition and voice processing for entry-level customer service and phone routing. This original functionality was rapidly eclipsed by ANI development that combined advanced machine learning with access to the wealth of information available on the internet on which to “train” or “learn.”

For example, ChatGPT is a large language model (LLM) and generative AI, which means its algorithms can be used to create new content, including audio, code, images, text, simulations, and videos.13“What is Generative AI?” McKinsey & Company, January 19, 2023, accessed July 29, 2023, https://www.mckinsey.com/featured-insights/mckinsey-explainers/what-is-generative-ai. ChatGPT has made recent headlines after the developer made it freely available to the public. Since the release of ChatGPT, the public has started testing the limits of this software by having the chatbot write essays, answer questions on college-level exams, complete complex programming, and in the case of ChaosGPT (a ChatGPT derivative), even plot for the extinction of the human species (thankfully, that has not gone well).14Karen Hao, “What Is ChatGPT? What to Know About the AI Chatbot,” The Wall Street Journal, February 8, 2023, accessed May 10, 2023, https://www.wsj.com/articles/chatgpt-ai-chatbot-app-explained-11675865177; Richard Pollina, “AI Bot, ChaosGPT Tweet Plans to ‘Destroy Humanity’ After Being Tasked,” New York Post, April 11, 2023, accessed May 10, 2023, https://nypost.com/2023/04/11/ai-bot-chaosgpt-tweet-plans-to-destroy-humanity-after-being-tasked/.

Google recently announced the launch of Bard, a ChatGPT rival, and Microsoft plans on including its version of generative AI in Bing.15Rebecca Klar, “The AI Arms Race is On, Are Regulators Ready?” The Hill, February 14, 2023, accessed May 12, 2023, https://thehill.com/policy/technology/3856207-the-ai-arms-race-is-on-are-regulators-ready/.

Though this ANI advancement is exciting and presents lucrative future opportunities, it is also not without perceived technological risk. In March 2022, Pew Research Center surveyed US adults asking them to respond to a series of specific AI applications. When asked if certain applications were a good or bad idea for society, 56% of respondents said computer chip implants in the human brain would be bad; 44% said AI in driverless passenger vehicles would be a bad idea; and 31% said AI algorithms used by social media companies to find false information on their sites would also be bad for society.16Lee Rainie, Cary Funk, Monica Anderson, and Alex Tyson, AI and Human Enhancement: Americans’ Openness is Tempered by a Range of Concerns, Pew Research Center, March 17, 2022, accessed May 12, 2023, https://www.pewresearch.org/internet/2022/03/17/ai-and-human-enhancement-americans-openness-is-tempered-by-a-range-of-concerns/.

A May 2023 survey conducted by Change Research for the Tech Oversight Project reports that 54% of Americans want Congress to pass legislation that ensures AI is regulated to protect privacy, is fairly applied, and recognizes the importance of safety while maximizing its benefits and minimizing its risks to society.17“Tech Oversight Project/Change Research National Poll: Americans Support Congressional AI Action, Tech Oversight Project,” May 4, 2023, accessed May 13, 2023, https://techoversight.org/2023/05/04/tech-oversight-project-change-research-national-poll-americans-support-congressional-ai-action/.

In truth, there is evidence of actual risks from embracing AI: “automation spurred job loss, privacy violations, deepfakes, algorithmic bias due to bad data, socioeconomic inequality, market volatility, and weapons automation.”18Mike Thomas, “8 Risks and Dangers of Artificial Intelligence (AI),” builtin, January 25, 2023, accessed July 27, 2023, https://builtin.com/artificial-intelligence/risks-of-artificial-intelligence.

While technology companies are working to enhance the capabilities of ANI to more robustly and effectively address commercial opportunities, as a society we should also be looking into how we expect ANI, and more broadly AI, to operate. What boundary conditions or “guardrails” do we wish to place on AI applications? And what public and/or private governance policy framework needs to be established to protect society from misinformation, bias, and plagiarism? How can we protect society while at the same time preserving the private sector’s ability to commercially innovate societal and technological benefits in the AI market space, including in medicine, advanced manufacturing, and autonomous vehicles, among others?

Given this introduction to AI and the emerging issues in its commercial development and implementation, this paper will explore the following research question: What kind of governance framework would best manage AI risks and promote its benefits for American society?

2 Regulatory Governance Models

In the policy arena, the term governance implies regulation. It also refers to a modern, flexible understanding of institutional control systems and indirect mechanisms intended to meet societal goals and objectives.19Robert Baldwin, Martin Cave, and Martin Lodge, “Introduction: Regulation – The Field and the Developing Agenda,” in The Oxford Handbook of Regulation, Robert Baldwin, Martin Cave, and Martin Lodge, eds. (Oxford University Press: Oxford, 2010, 3–16), https://doi.org/10.1093/oxfordhb/9780199560219.003.0001. In general, there are two forms of governance: public governance and private governance.

Public governance (also referred to as “hard law” governance) “consists of the formal and informal rules, procedures, practices, and interactions within the State and between the State, non-state institutions and citizens, that frame the exercise of public authority and decision-making in the public interest.”20OECD, “The Values of Sound Public Governance,” in Policy Framework on Sound Public Governance: Baseline Features of Governments that Work Well, December 22, 2020, accessed May 11, 2023, https://www.oecd-ilibrary.org/governance/policy-framework-on-sound-public-governance_c03e01b3-en. Wiener notes that government regulation, i.e., public governance, may inhibit or stimulate technological change. This relationship and outcome are a result of the regulatory choice and design of policy.21Jonathan B. Wiener, “The Regulation of Technology, and the Technology of Regulation,” Technology in Society: An International Journal 26, no. 2–3 (April 1, 2004): 483–500, https://doi.org/10.1016/j.techsoc.2004.01.033.

Private governance is a component of “soft law” governance, a concept that includes a broad spectrum of potential governance tools, many of which can be government influenced or created. It describes the various forms of private enforcement, self-governance, self-regulation, or informal mechanisms that private individuals and organizations (as opposed to government) use to create order, facilitate exchange, and protect property rights.22Edward Peter Stringham, Private Governance: Creating Order in Economic and Social Life (Oxford University Press: Oxford and New York, 2015, 3–4). Marchant and Allenby explain that soft law approaches “include instruments or arrangements that create substantive expectations that are not directly enforceable, unlike hard law requirements such as treaties and statutes.”23Gary E. Marchant and Braden Allenby, “Soft Law: New Tools for Governing Emerging Technologies,” Bulletin of the Atomic Scientists 73, no. 2 (2017): 112. As Gutierrez and Marchant note:

Compared to its hard law counterparts, soft law programs are more flexible and adaptable, and any organization can create or adopt a program. Once programs are created, they can be adapted to reactively or proactively address new conditions. Moreover, they are not legally tied to specific jurisdictions, so they can easily apply internationally. Soft law can serve a variety of objectives: it can complement or substitute hard law, operate as a main governance tool, or as a back-up option. For all these reasons, soft law has become the most common form of AI governance.24Carlos Ignacio Gutierrez and Gary E. Marchant, “How Soft Law is Used in AI Governance,” Brookings Institution, May 27, 2021, https://www.brookings.edu/articles/how-soft-law-is-used-in-ai-governance/.

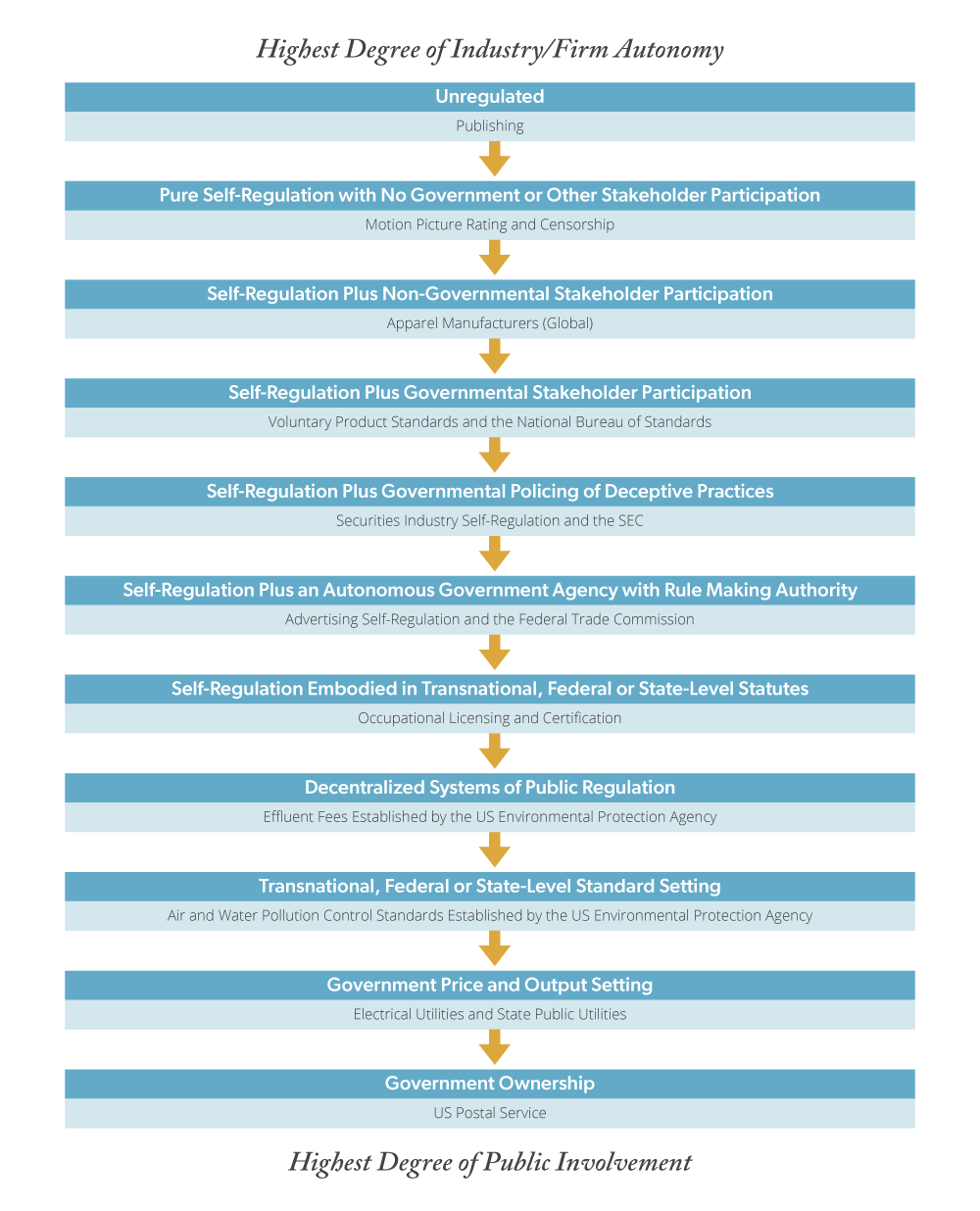

When applied to the commercial sector, and specifically to industries and/or fundamental, cross-industry technologies in the American regulatory state, there is a taxonomy of governance models that ranges from one end of the regulatory spectrum (e.g., unregulated industries governed by market forces) to the polar opposite (e.g., direct government ownership).25Michael Moran, “The Rise of the Regulatory State” (Chapter 16), in The Oxford Handbook of Business and Government, David Coen, Wyn Grant, and Graham Wilson, eds. (Oxford University Press: Oxford and New York, 383–403, 2010). Figure 1, the Industry Regulatory Spectrum, describes: 1) the specific form of regulation; 2) a progression from one end of the vertical spectrum (Private Autonomy) to the other (Public Involvement); and 3) an example of a corresponding industry or policy mechanism for each specific form of regulation.26David A. Garvin, “Can Industry Self-Regulation Work?” California Management Review 25, no. 4 (1983): 37–52; Thomas A. Hemphill, “Self-Regulation, Public Issue Management and Marketing Practices in the U.S. Entertainment Industry,” Journal of Public Affairs 3, no. 4 (2003): 338–357, https://doi.org/10.1002/pa.162.

Figure 1. Industry Regulatory Spectrum (Form and Example of Governance)

The term “self-regulation” in the context of private governance is a critical concept in the Industry Regulatory Spectrum. As Hemphill explains, “self-regulation exists when a firm, an industry, or the business community establishes its own standards of behavior (1) where no such statutory or regulatory requirements exist or (2) when such standards assist in complying with or exceeding statutory or regulatory requirements.”27Thomas A. Hemphill, “Self-Regulating Industry Behavior: Antitrust Limitations and Trade Association Codes of Conduct,” Journal of Business Ethics 11, no. 12 (1992): 915, https://doi.org/10.1007/BF00871957.

Furthermore, self-regulation is voluntary, covers behavior that is discretionary, and usually relies on a code of conduct as the document that clarifies sanctioned policies and standards.28Anil K. Gupta and Lawrence J. Lad, “Industry Self-Regulation: An Economic, Organizational and Political Analysis,” Academy of Management Review 8, no. 3 (1983): 416–425. Self-regulation at the industry level is defined by Gupta and Lad as “a regulatory process whereby an industry-level organization (such as a trade association) sets and enforces rules and standards, relating to the conduct of firms in the industry.”29Gupta and Lad, “Industry Self-Regulation,” 417. Academic researchers suggest that the greatest potential for industry self-regulation lies with a mixed form of industry rule-making and government oversight.30Gupta and Lad, “Industry Self-Regulation;” Garvin, “Can Industry Self-Regulation Work?”

Guidelines, standards, and codes of conduct are some soft law mechanisms that are embraced, but there are also “stealth” administrative rule-making activities, including guidance documents, interpretive rules, and general policy statements.31David L. Franklin, “Legislative Rules, Nonlegislative Rules, and the Perils of the Shortcut,” Yale Law Journal 120, no. 2 (2010): 276–326, https://www.yalelawjournal.org/pdf/913_du1o1t57.pdf; John D. Graham and James Broughel, “Confronting the Problem of Stealth Regulation,” Mercatus Policy, Mercatus Center at George Mason University, April 2015; Marchant and Allenby, “Soft Law.” Moreover, says Abbott, soft law governance approaches, such as “information disclosures, codes of conduct, and certification mechanisms,” have the innate advantage of being “adopted and revised much more rapidly than formal regulations.”32Kenneth W. Abbott, “Introduction: The Challenges of Oversight for Emerging Technologies,” Innovative Governance Models for Emerging Technologies, Gary E. Marchant, Kenneth W. Abbott, and Braden Allenby, eds., (Edward Elgar Publishing: Northampton, MA, 2014, 6).

In contrast, an inherent weakness of soft law is that it may successfully justify bad behavior more rapidly than a formal regulatory process. Because soft law governance lacks effective enforcement mechanisms, it must rely on aligning clearly defined and well-understood incentives with stakeholders.33Gutierrez and Marchant, “How Soft Law is Used in AI Governance.” Thus, for effectively managing the risks of AI, it is critical that stakeholders consider the inclusion of implementation mechanisms and appropriate incentives.34Gutierrez and Marchant, “How Soft Law is Used in AI Governance.”

Unlike laws and regulations, which are governed by the political jurisdiction of the government that enacts them, soft law in the form of international norms and standards potentially can exert a strong influence without raising extraterritoriality concerns.35John Villasenor, “Soft Law as a Complement to AI Regulation,” Brookings Institution, July 31, 2020, accessed July 27, 2023, https://www.brookings.edu/articles/soft-law-as-a-complement-to-ai-regulation/. As Peter Cihon of the Future of Humanity Institute at the University of Oxford explains: “[AI] presents novel policy challenges that require coordinated global responses. Standards, particularly those developed by existing international standards bodies, can support the global governance of AI development.”36Peter Cihon, Standards for AI Governance: International Standards to Enable Global Coordination in AI Research & Development, Future of Humanity Institute, University of Oxford, April 2019, 2.

Will all countries agree to follow these international AI standards, especially if doing so is not in their best economic, military, or political interests? Obviously not, and this could result in restrictions on international trade (including data transfers) between countries that abide by such international standards and countries that do not.

Given this brief introduction to regulatory governance models, this Industry Regulatory Spectrum will be useful in analyzing recent AI public and private governance proposals. It will also help illuminate the important role that such private governance frameworks have in optimizing the trade-offs between AI risks and benefits of such innovation for American society.

3 Artificial Intelligence: Public Governance Proposals

During the final year of the Trump administration, the first major public governance AI initiative was adopted by the federal government. In February 2020, the US Department of Defense (DOD) adopted its AI Ethical Principles “designed to enhance the department’s commitment to upholding the highest ethical standards as outlined in the DOD AI Strategy.”37“DOD Adopts Ethical Principles for Artificial Intelligence,” US Department of Defense, February 24, 2020, accessed July 28, 2023, https://www.defense.gov/News/Releases/release/article/2091996/dod-adopts-ethical-principles-for-artificial-intelligence/. According to the press release, “The DOD Joint Artificial Intelligence Center will be the focal point for coordinating implementation of AI ethical principles for the department,”38“DOD Adopts Ethical Principles,” US Department of Defense. and “the DOD’s AI Ethical Principles build on the US military’s existing ethics framework based on the US Constitution, Title 10 of the US Code, Law of War, existing international treaties and longstanding norms and values.”39“DOD Adopts Ethical Principles,” US Department of Defense.

The DOD’s AI Ethical Principles encompass five major areas:

- DOD personnel will exercise appropriate levels of judgment and care, while remaining responsible for the development, deployment, and use of AI capabilities.

- The Department will take deliberate steps to minimize unintended bias in AI capabilities.

- The Department’s AI capabilities will be developed and deployed such that relevant personnel possess an appropriate understanding of the technology, development processes, and operational methods applicable to AI capabilities, including with transparent and auditable methodologies, data sources, and design procedure and documentation.

- The Department’s AI capabilities will have explicit, well-defined uses, and the safety, security, and effectiveness of such capabilities will be subject to testing and assurance within those defined uses across their entire life-cycles.

- The Department will design and engineer AI capabilities to fulfill their intended functions while possessing the ability to detect and avoid unintended consequences, and the ability to disengage or deactivate deployed systems that demonstrate unintended behavior.40“DOD Adopts Ethical Principles,” US Department of Defense.

However, since the fall of 2022, there has been a flurry of public policy activity at the national level concerning AI. In October 2022, the White House Office of Science and Technology Policy (OSTP) released the Blueprint for an AI Bill of Rights (Blueprint), a voluntary guide that identifies five principles to assist firms and industries in the design, development, and deployment of AI products and other automated systems so that such systems protect the rights of the American public.41“Blueprint for an AI Bill of Rights: Making Automated Systems Work for the American People,” The White House, Office of Science and Technology Policy, October 4, 2022, accessed May 13, 2023, https://www.whitehouse.gov/ostp/ai-bill-of-rights/.

The Blueprint’s five principles protecting the American public consist of the following:

- Safe and Effective Systems: Americans should be protected from unsafe or ineffective systems.

- Algorithmic Discrimination Protections: Americans should not face discrimination by algorithms, and systems should be used and designed in an equitable way.

- Data Privacy: Americans should be protected from abusive data practices via built-in protections and have agency over how data about them is used.

- Notice and Explanation: Americans should know that an automated system is being used and understand how and why it contributes to outcomes that impact them.

- Human Alternatives, Consideration, and Fallback: Americans should be able to opt out, where appropriate, and have access to a person who can quickly consider and remedy problems they encounter.42“Blueprint for an AI Bill of Rights,” The White House.

The Blueprint shares much in common with the Organisation for Economic Co-operation and Development’s (OECD) Recommendations on Artificial Intelligence (Recommendations). The US government, an OECD member, and specifically the US Department of State,43“Artificial Intelligence (AI),” US Department of State, accessed July 28, 2023, https://www.state.gov/artificial-intelligence/. was a leader in the effort to establish the Recommendations, which were adopted by the OECD Council on May 22, 2019. Like the Blueprint, the Recommendations include five values-based principles:

- inclusive growth, sustainable development and well-being;

- human-centered values and fairness;

- transparency and explainability;

- robustness, security and safety;

- accountability.44The Organisation for Economic Co-operation and Development (OECD), Recommendation of the Council on Artificial Intelligence, OECD Legal Instruments, 2022, https://legalinstruments.oecd.org/api/print?ids=648&lang=en.

The US Department of State defines AI as “a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments,” a definition that is included in the National Artificial Intelligence Initiative Act of 2020.45“Artificial Intelligence (AI),” US Department of State. In addition, the State Department played a pivotal role in establishing the voluntary Global Partnership on Artificial Intelligence (GPAI), initiated in June 2020 as a multi-stakeholder effort to advance AI while recognizing the importance of human rights and democratic values in its development.46“Artificial Intelligence (AI),” US Department of State. Furthermore, the Department of State has played a critical role in guiding GPAI and ensuring it complements the work of the OECD.47“Artificial Intelligence (AI),” US Department of State.

In January 2023, the National Institute of Standards and Technology (NIST) released its Artificial Intelligence Risk Management Framework (AI RMF), as required by the National AI Initiative Act of 2020.48US Department of Commerce, Artificial Intelligence Risk Management Framework (AI RMF 1.0), National Institute of Standards and Technology, January 2023, https://nvlpubs.nist.gov/nistpubs/ai/NIST.AI.100-1.pdf. According to NIST, “The Framework is intended to be voluntary, rights-preserving, non-sector specific, and use-case agnostic, providing flexibility to organizations of all sizes and in all sectors and throughout society to implement the approaches in the Framework.”49US Department of Commerce, Artificial Intelligence Risk Management Framework (emphasis added). The characteristics of trustworthy AI systems addressed in the AI RMF include that they be valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with their harmful biases managed.50US Department of Commerce, Artificial Intelligence Risk Management Framework.

When Congress passed the National AI Initiative Act in 2020, it directed the National Science Foundation to work with the White House Office of Science and Technology Policy (OSTP), to:

Establish a task force to create a roadmap for a National AI Research Resource (NAIRR)—a shared research infrastructure that would provide AI researchers and students with significantly expanded access to computational resources, high-quality data, educational tools, and user support.51National Artificial Intelligence Research Resource Task Force (NAIRR), Strengthening and Democratizing the U.S. Artificial Intelligence Innovation Ecosystem: An Implementation Plan for a National Artificial Intelligence Research Resource, National Science Foundation and Office of Science and Technology Policy, January 2023, https://www.ai.gov/wp-content/uploads/2023/01/NAIRR-TF-Final-Report-2023.pdf.

Key recommendations in the task force’s January 2023 report include first, that “the NAIRR should be established with four measurable goals in mind, namely to (1) spur innovation, (2) increase diversity of talent, (3) improve capacity, and (4) advance trustworthy AI.”52NAIRR, Strengthening and Democratizing. Second, the NAIRR should set the standard for responsible AI research through the design and implementation of its governance processes, and implement system safeguards in accordance with established guidelines.53NAIRR, Strengthening and Democratizing.

In April 2023, officials from the Consumer Financial Protection Bureau, Equal Employment Opportunity Commission, Federal Trade Commission, and the US Department of Justice released a “Joint Statement on Enforcement Efforts Against Discrimination and Bias In Automated Systems” (Statement).54Rohit Chopra, Kristen Clarke, Charlotte A. Burrows, and Lina M. Khan, “Joint Statement on Enforcement Efforts Against Discrimination and Bias in Automated Systems,” April 25, 2023, https://www.ftc.gov/system/files/ftc_gov/pdf/EEOC-CRT-FTC-CFPB-AI-Joint-Statement%28final%29.pdf. These are among the federal agencies responsible for enforcing civil rights, non-discrimination, fair competition, consumer protection, and other vitally important legal protections.55Chopra, Clarke, Burrows, and Khan, “Joint Statement on Enforcement Efforts.” In this Statement, these agencies reiterate their “resolve to monitor the development and use of automated systems [including AI] and promote responsible innovation,” while pledging “to vigorously use [their] collective authorities to protect individuals’ rights regardless of whether legal violations occur through traditional means or advanced technologies.”56Chopra, Clarke, Burrows, and Khan, “Joint Statement on Enforcement Efforts.”

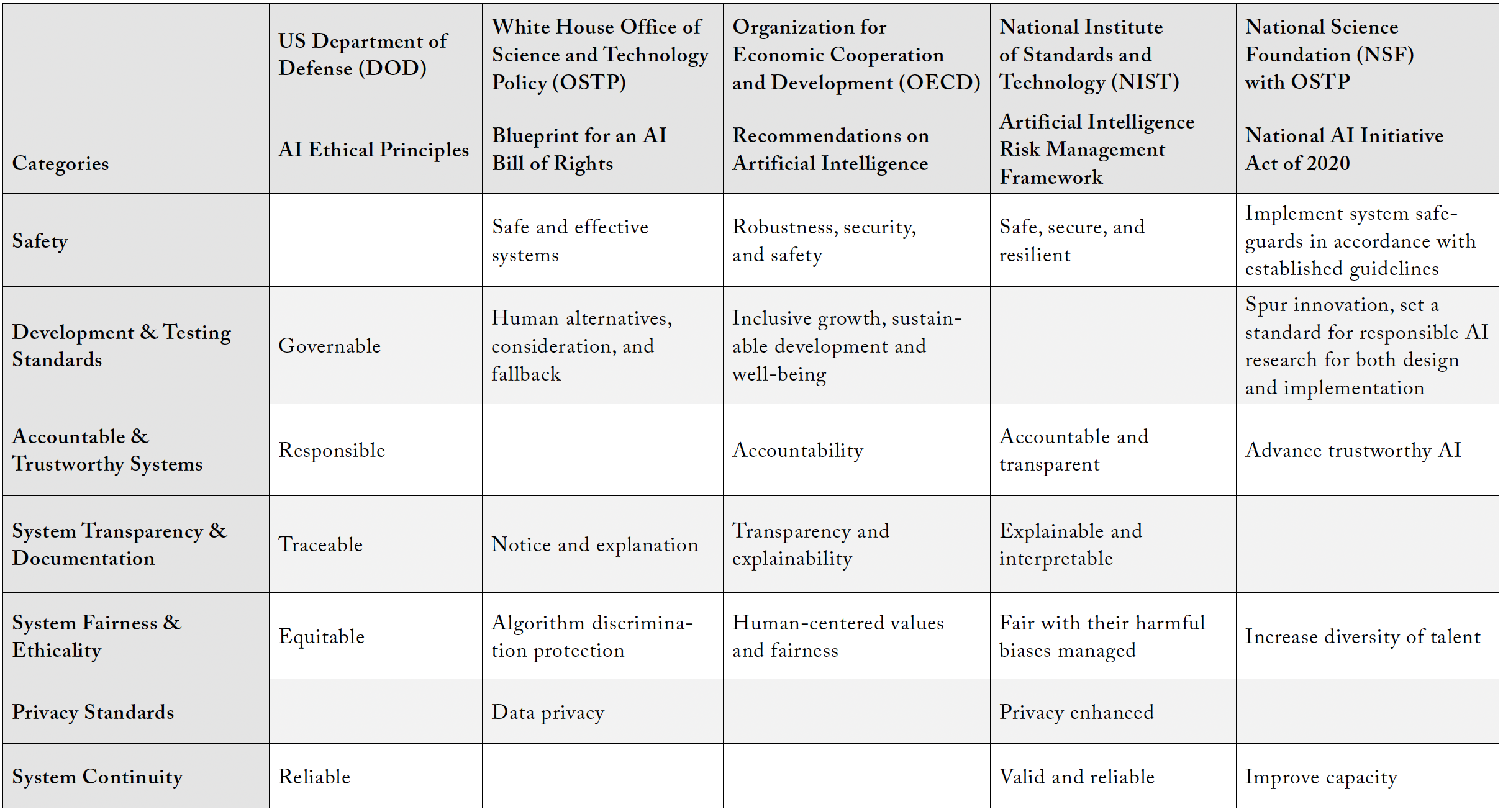

In this paper, an affinity diagram is employed to help identify and clarify important AI policy principles (patterns of ideas) found in the key public AI governance initiatives described above (see table 1, Artificial Intelligence Policy Principles: Public Entities). Developed by Jiro Kawakita, the affinity diagram, or “KJ method,” is an analytic tool used to organize ideas or attributes with underlying similarity into subgroups with common themes. It helps to identify patterns and establish related groups that exist in qualitative datasets.57“The Affinity Diagram Tool,” Six Sigma Daily, December 25, 2012, accessed May 17, 2023, https://www.sixsigmadaily.com/the-affinity-diagram-tool/; Scott M. Shafer, H. Jeff Smith, and Janice C. Linder, “The Power of Business Models,” Business Horizons 48, no. 3 (2005): 199–207.

Table 1. Artificial Intelligence Policy Principles: Public Entities

What useful commonalities can be derived from table 1? It is readily apparent that public governance AI policy principles are focused on five categories. For instance, the category of System Fairness & Ethicality is universal among all five public entities. Following closely behind with four public entities are the categories of

- Safety (OSTP, OECD, NIST, and NSF with OSTP),

- Development and Testing Standards (DOD, OSTP, OECD, and NSF with OSTP),

- Accessible & Trustworthy Systems (DOD, OECD, NIST, and NSF with OSTP), and

- System Transparency & Documentation (DOD, OECD, NIST, and NSF with OSTP).

System Continuity has three public entities represented (DOD, NIST, and NSF with OSTP), and Privacy Standards has two public entities represented (OSTP and NIST). While privacy standards are directly attributed to only two public entities, it is safe to assume that “privacy” is implicitly present in some form among the other three public entities’ initiatives.

In Congress, there has been significant recent discussion of initiating congressional task forces or commissions to develop national rules on AI implementation. Senate Majority Leader Charles Schumer (D-NY) has released a proposal for legislation in the 118th Congress that would include four “guardrails” for AI technology: identifying who trained the AI algorithm, the intended audience, its data sources, and response mechanisms, as well as strong, transparent ethical boundaries. However, no serious legislation progressed toward enactment in either chamber in the first half of 2023. This is partially due to a significant knowledge gap on this subject that legislators need to overcome.58“Schumer Launches Major Effort to Get Ahead of Artificial Intelligence,” Senate Democrats Newsroom, April 12, 2023, accessed May 13, 2023, https://www.democrats.senate.gov/newsroom/press-releases/schumer-launches-major-effort-to-get-ahead-of-artificial-intelligence; Brendan Bordelon and Mohar Chatterjee, “‘It’s Got Everyone’s Attention’: Inside Congress’s Struggle to Rein in AI,” POLITICO, May 4, 2023, accessed May 12, 2023, https://www.politico.com/news/2023/05/04/congresss-scramble-build-ai-agenda-00095135. There were a number of legislative proposals addressing AI introduced in the 117th Congress, including the Algorithmic Accountability Act, the American Data Privacy and Protection Act (which included AI regulatory provisions), the Algorithmic Justice and Online Platform Transparency Act, and the Protecting Americans from Dangerous Algorithms Act, but none were enacted into law before Congress adjourned.59Anna Lenhart, Federal AI Legislation: An Analysis of Proposals from the 117th Congress Relevant to Generative AI Tools, Institute for Data Democracy & Politics, The George Washington University, June 2023, accessed July 26, 2023, https://iddp.gwu.edu/sites/g/files/zaxdzs5791/files/2023-06/federal_ai_legislation_v3.pdf.

Presently, there are two ideological “camps” on Capitol Hill: one that wants to move quickly and establish national rules on AI implementation, and a second that wants to go slow, learn more about AI technology, and have an informed legislative response that does not create barriers to innovation—especially in the critical healthcare and biotechnology industries.60Bordelon and Chatterjee, “‘It’s Got Everyone’s Attention.’” Lawmakers in both chambers are expected to reintroduce legislation in the 118th Congress, specifically the Algorithmic Accountability Act and the Algorithmic Justice and Online Platform Transparency Act, both of which include regulatory frameworks addressing the risks associated with AI.61Michael Bopp, Roscoe Jones, Jr., Vivek Mohan, Alexander Southwell, Amanda Neely, Daniel Smith, Frances Waldmann, and Sean Brennan, “Federal Policymakers’ Recent Actions Seek to Regulate AI,” Client Alert, Gibson Dunn, May 19, 2023, accessed July 26, 2023, https://www.gibsondunn.com/federal-policymakers-recent-actions-seek-to-regulate-ai/.

4 Artificial Intelligence: Private Governance Approaches

The US private sector, specifically the digital media technology industry, has in recent years been actively involved in developing voluntary AI principles and policies to guide their companies’ business decision-making. These companies and industry associations have built on a foundation of ethical best practices developed by other non-governmental organizations and professional groups. These include the Berkman Klein Center at Harvard University,62Jessica Fjeld and Adam Nagy, Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI, Berkman Klein Center for Internet & Society, Harvard University, 2020, accessed July 26, 2023, https://cyber.harvard.edu/publication/2020/principled-ai. the Center for Law, Science, and Innovation at Arizona State,63Carlos Ignacio Gutierrez and Gary Marchant, “A Global Perspective of Soft Law Programs for the Governance of Artificial Intelligence,” Center for Law, Science and Innovation, Sandra Day O’Connor School of Law, Arizona State University, August 2021, https://lsi.asulaw.org/softlaw/wp-content/uploads/sites/7/2022/08/final-database-report-002-compressed.pdf. the Institute of Electrical and Electronics Engineers (IEEE),64IEEE Standards Association Statement of Intention: Our Role in Addressing Ethical Considerations of Autonomous and Intelligent Systems (A/IS), IEEE, March 29, 2018, accessed July 26, 2023, https://standards.ieee.org/wp-content/uploads/import/documents/other/ethical-considerations-ai-as-29mar2018.pdf. the International Organization for Standardization (ISO)65Elizabeth Gasiorowski-Denis, “To Ethicize or Not to Ethicize …,” ISO, November 11, 2019, accessed July 26, 2023, https://www.iso.org/news/ref2454.html. (including ISO/IEC 42001 [Standards for AI Management Systems] and ISO/IEC 23894 [AI Risk Management]), and more generally, the Association for Computing Machinery and British Standards Institution (BSI).

In October 2017, the Information Technology Industry Council (ITI), an industry association representing major tech companies such as Apple, Google (Alphabet), Microsoft, Amazon, and Meta, released the Artificial Intelligence Policy Principles (AI Policy Principles), a document addressing responsible and ethical AI development.66Juli Clover, “Industry Group Representing Apple and Google Releases AI Policy Principles,” MacRumors.com, October 25, 2017, accessed May 15, 2023, https://www.macrumors.com/2017/10/25/iti-apple-google-ai-policy-principles/. These AI Policy Principles include:

- Responsible design and development

- Safety and controllability

- Robust and representative data (responsible use and integrity of data)

- Interpretability (mitigating bias)

- Cybersecurity and privacy67Information Technology Industry Council, “AI Policy Principles,” October 2017, accessed May 15, 2023, https://www.itic.org/resources/AI-Policy-Principles-FullReport2.pdf.

In September 2019, the US Chamber of Commerce (Chamber), an apex cross-industry association, released ten AI policy principles crafted as a private governance document in collaboration with more than 50 companies representing all sizes and sectors of the American business community (the Chamber also endorses the OECD Recommendations on AI as a set of guidelines to advance its vision).68“U.S. Chamber Releases Artificial Intelligence Principles,” U.S. Chamber of Commerce, September 23, 2019, accessed May 15, 2023, https://www.uschamber.com/technology/us-chamber-releases-artificial-intelligence-principles. These ten Principles on Artificial Intelligence include:

- Recognize trustworthy AI is a partnership

- Be mindful of existing rules and regulations

- Adopt risk-based approaches to AI governance

- Support private and public investment in AI research and development

- Build an AI-ready workforce

- Promote open and accessible government data

- Pursue robust and flexible privacy regimes

- Advance intellectual property frameworks that protect and promote innovation

- Commit to cross-border data flows

- Abide by international standards69U.S. Chamber of Commerce, “U.S. Chamber of Commerce Principles on Artificial Intelligence,” 2019, accessed May 15, 2023, https://www.uschamber.com/assets/archived/images/chamber_ai_principles_-_general.pdf.

In the digital media industry, major companies (including Alphabet, Meta, and Microsoft) have in recent years issued explicit policies and/or principles of AI to be used in their business operations. Alphabet’s Google AI Principles include:

- Be socially beneficial

- Avoid creating or reinforcing unfair bias

- Be built and tested for safety

- Be accountable to people

- Incorporate privacy design principles

- Uphold high standards of scientific excellence

- Be made available for uses that accord with these principles70Google, 2022 Principles Progress Update, 2022, accessed May 15, 2023, https://ai.google/static/documents/ai-principles-2022-progress-update.pdf.

Meta’s Five Pillars of Responsible AI consist of

- Fairness and inclusion

- Robustness and safety

- Transparency and control

- Accountability and governance

- Collaborating on the future of responsible AI71Meta, “Facebook’s Five Pillars of Responsible AI,” June 22, 2021, accessed May 15, 2023, https://ai.meta.com/blog/facebooks-five-pillars-of-responsible-ai/.

Lastly, Microsoft has initiated its Microsoft Responsible AI Standard (v2), which consists of the following goals:

- Accountability

- Transparency

- Fairness

- Reliability and safety

- Privacy and security

- Inclusiveness72Microsoft, Microsoft Responsible AI Standard, v2, June 2022, accessed May 15, 2023, https://query.prod.cms.rt.microsoft.com/cms/api/am/binary/RE5cmFl.

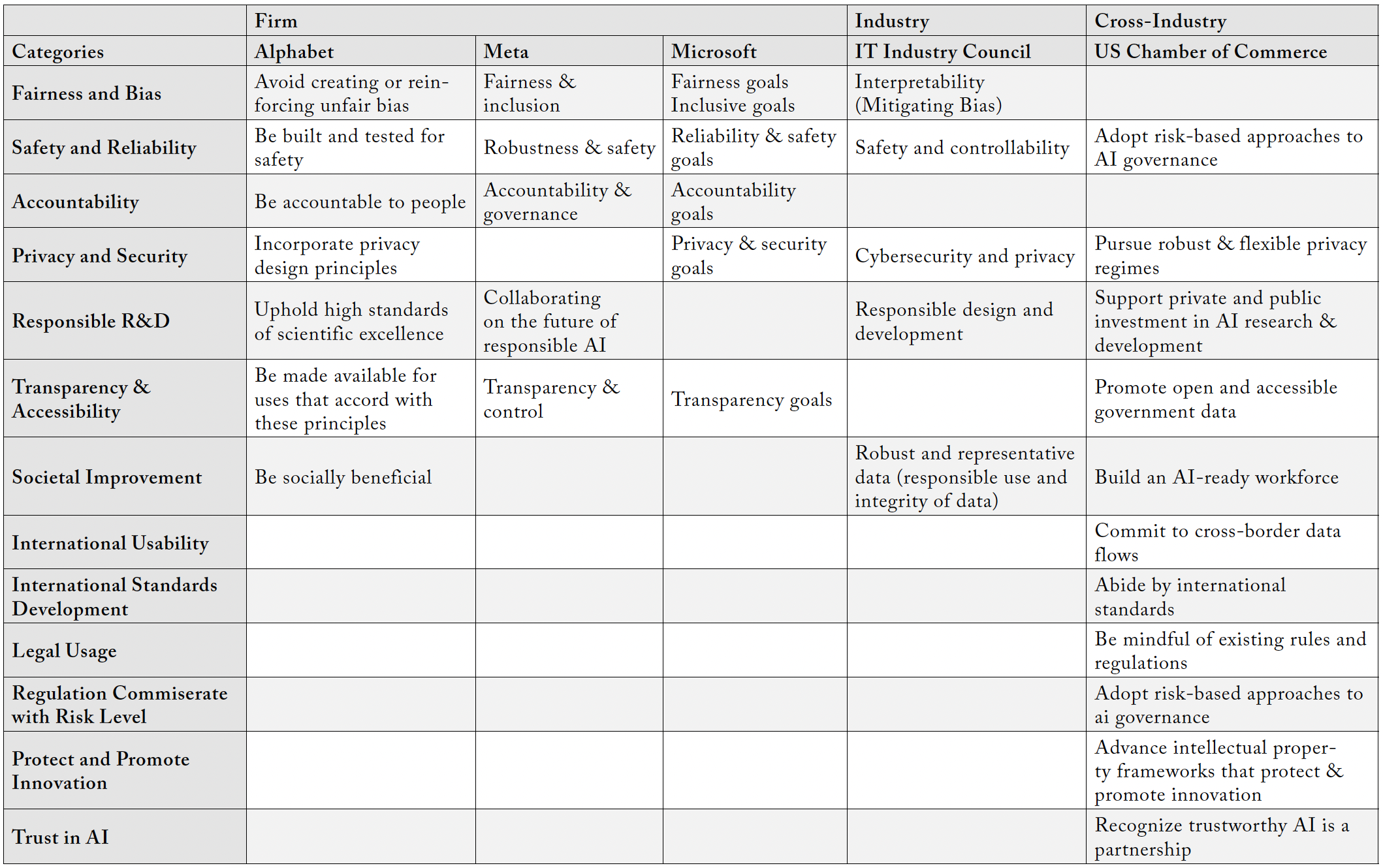

Table 2, Artificial Intelligence Policy Principles, again uses an affinity diagram to inventory these five major firm-, industry-, and cross-industry examples of organizational AI policy principles, with each AI policy principle categorized by thematic similarity across organizations. (This is not a scientific sample; it is offered for illustrative policy purposes only.)73The information utilized in table 2 “Artificial Intelligence Policy Principles: Firm, Industry, and Cross-Industry” is drawn from organizational documents found at the following references: Information Technology Industry Council, “AI Policy Principles;” U.S. Chamber of Commerce, “Principles on Artificial Intelligence;” Google, 2022 Principles Progress Update; Meta, “Facebook’s Five Pillars of Responsible AI;” Microsoft, Microsoft Responsible AI Standard, v2.

Table 2. Artificial Intelligence Policy Principles: Firm, Industry, and Cross-Industry

What useful commonalities can be derived from table 2? It is readily apparent that private governance AI policy principles are focused on seven categories. For instance, the category of “Safety and reliability” is universal among all five organizations. Following closely behind with four organizations each are the categories of “Fairness and bias” (Alphabet, Microsoft, ITI, and the Chamber), “Privacy and security” (Alphabet, Microsoft, ITI, and the Chamber), “Responsible R&D” (Alphabet, Meta, ITI, and the Chamber), and “Transparency and accessibility” (Alphabet, Meta, Microsoft, and the Chamber). Last are the two categories of “Accountability” (Alphabet, Meta, and Microsoft) and “Societal improvement” (Alphabet, ITI, and the Chamber), each of which includes three organizations.

While Apple and Amazon are not represented in table 2 (as neither firm has made publicly available an explicit firm-level AI policy principles document), it should be noted that both firms are members of the Information Technology Industry Council, whose “AI Policy Principles” include fairness and bias, safety and reliability, privacy and security, responsible R&D, and societal improvement, five of the seven significant categories identified.74Information Technology Industry Council, “AI Policy Principles.”
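The tallies reported for table 2 can be cross-checked with a few lines of code. The following is only an illustrative sketch in Python: table 2 itself is not reproduced in this text, so the mapping is transcribed from the category memberships stated in the prose above, and the short organization labels and the helper names `coverage` and `categories_for` are assumptions introduced here for illustration, not part of the paper's affinity-diagram method.

```python
# Category membership transcribed from the prose discussion of table 2.
# Organization labels are shorthand chosen for this sketch (assumption).
ALL_FIVE = {"Alphabet", "Meta", "Microsoft", "ITI", "Chamber"}

membership = {
    "Safety and reliability": set(ALL_FIVE),
    "Fairness and bias": {"Alphabet", "Microsoft", "ITI", "Chamber"},
    "Privacy and security": {"Alphabet", "Microsoft", "ITI", "Chamber"},
    "Responsible R&D": {"Alphabet", "Meta", "ITI", "Chamber"},
    "Transparency and accessibility": {"Alphabet", "Meta", "Microsoft", "Chamber"},
    "Accountability": {"Alphabet", "Meta", "Microsoft"},
    "Societal improvement": {"Alphabet", "ITI", "Chamber"},
}


def coverage(groups):
    """Return the number of organizations represented in each category."""
    return {category: len(orgs) for category, orgs in groups.items()}


def categories_for(org, groups):
    """Return the sorted list of categories in which an organization appears."""
    return sorted(category for category, orgs in groups.items() if org in orgs)


counts = coverage(membership)
iti_categories = categories_for("ITI", membership)

# Print categories from most to least widely shared.
for category, n in sorted(counts.items(), key=lambda item: -item[1]):
    print(f"{category}: {n} of {len(ALL_FIVE)} organizations")
print(f"ITI appears in {len(iti_categories)} of {len(membership)} categories")
```

Encoding the groupings as sets keeps the count for each category and the per-organization view (such as the ITI categories noted for Apple and Amazon) derivable from a single mapping.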

5 Artificial Intelligence and Meta-Regulation

From the literature of science and technology studies (STS), insightful and valuable concepts can be drawn upon to support this paper’s policy analysis. These include an emphasis on the plurality of knowledge and assumptions that inform collective action concerning decisions about social control (i.e., regulation) and assessment of an emerging technology (e.g., AI) in American society.

For example, one STS concept, the Collingridge dilemma, creates a methodological quandary for technological assessment when considering the responsible governance of AI technological innovation.75David Collingridge, The Social Control of Technology, (New York: St. Martin’s Press, 1980, 19). According to Collingridge, “Attempting to control a technology is difficult … because during its early stages, when it can be controlled, not enough can be known about its harmful social consequences to warrant controlling its development; but by the time these consequences are apparent, control has become costly and slow.”76Collingridge, The Social Control of Technology.

Related to the Collingridge dilemma is the pacing problem found in exercising technology assessment activities and designing and implementing a regulatory framework. The pacing problem, according to Thierer, “refers to the quickening pace of technological developments and the inability of governments to keep up with those changes.”77Adam Thierer, “The Pacing Problem and the Future of Technology Regulation,” Mercatus Center at George Mason University, August 8, 2018, accessed May 22, 2023, https://www.mercatus.org/economic-insights/expert-commentary/pacing-problem-and-future-technology-regulation. The pacing problem, at its core a knowledge deficiency problem, is based on the “law of disruption,” which Downes describes as how “technology changes exponentially, but social, economic, and legal systems change incrementally.”78Larry Downes, The Laws of Disruption: Harnessing the New Forces That Govern Life and Business in the Digital Age (Basic Books: New York, 2009, 2). Marchant explains the pacing problem in greater detail:

In contrast to this accelerating pace of technology, the legal frameworks that society relies on to regulate and manage emerging technologies have not evolved as rapidly, fueling concerns about a growing gap between the rate of technological change and management of that change through legal mechanisms.79Gary E. Marchant, “The Growing Gap Between Emerging Technologies and the Law,” in The Growing Gap Between Emerging Technologies and Legal-Ethical Oversight: The Pacing Problem, Gary E. Marchant, Braden R. Allenby, and Joseph R. Herkert, eds. (Springer: Dordrecht, 19–34, 2011, 19).

Further, there are three recognized technological innovation governance approaches in the STS literature, all of which have relevance to the research question posed in this paper: (1) the precautionary principle, (2) responsible innovation, and (3) permissionless innovation.

First, emerging at the end of the 20th century, the precautionary principle (PP) was defined (and endorsed) by the United Nations Educational, Scientific and Cultural Organization’s (UNESCO) World Commission on the Ethics of Scientific Knowledge and Technology (COMEST) as follows: “when human activities may lead to morally unacceptable harm that is scientifically plausible but uncertain, actions shall be taken to avoid or diminish that harm.”80World Commission on the Ethics of Scientific Knowledge and Technology, The Precautionary Principle (United Nations Educational, Scientific and Cultural Organization: New York, 2005). The precautionary principle is often used by policymakers to introduce government regulation where there is the possibility of “serious harm to human health or the environment”81Steven G. Gilbert, “Lessons Learned: Looking Back to Go Forward,” Collaborative for Health & Environment (CHE), accessed July 29, 2023, https://www.healthandenvironment.org/environmental-health/social-context/history/precautionary-principle-the-wingspread-statement. resulting from the implementation of a certain policy decision, but conclusive evidence of the adverse effects of the decision is not yet available.

Second, responsible innovation was formally defined by Von Schomberg as:

… a transparent, interactive process (emphasis added) by which societal actors and innovators become mutually responsive to each other with a view to the (ethical) acceptability, sustainability and societal desirability of the innovation process and its marketable products (in order to allow a proper embedding of scientific and technological advances in our society).82Renee Von Schomberg, “Prospects for Technology Assessment in a Framework of Responsible Research and Innovation,” in Marc Dusseldorp and Richard Beecroft, eds., Technikfolgen abschätzen lehren: Bildungspotenziale transdisziplinärer Methoden. Wiesbaden: VS Verlag für Sozialwissenschaften: 2011, 39–61.

Of more recent vintage, and offered from a policy process perspective, Valdivia and Guston recognize that responsible innovation

… considers innovation inherent to democratic life and recognizes the role of innovation in the social order and prosperity. It also recognizes that at any point in time, innovation and society can evolve down several paths and the path forward is to have to some extent open to collective choice. What RI [responsible innovation] pursues is a governance of innovation where that choice is more consonant with democratic principles. … RI … appreciates the power of free markets in organizing innovation and realizing social expectations but … [is also] self-conscious about the social costs that markets do not internalize.83Walter D. Valdivia and David H. Guston, Responsible Innovation: A Primer for Policymakers, (Brookings Institution Center for Technology Innovation: Washington, DC, 2–3).

Third, Thierer explains the notion of permissionless innovation:

… experimentation with new technologies and business models should generally be permitted by default. Unless a compelling case can be made that a new invention will bring serious harm to society, innovation should be allowed to continue unabated and problems, if any develop, can be addressed later.84Adam Thierer, Permissionless Innovation: The Continuing Case for Comprehensive Technological Freedom (revised edition), Mercatus Center at George Mason University: Arlington, VA, 2016, 1.

Thierer also notes that, unlike the precautionary principle, permissionless innovation switches the burden of proof to opponents of technological change by asserting five fundamental attributes associated with it:

- Technological innovation is the single most important determinant of long-term human well-being.

- There is real value to learning through continued trial-and-error experimentation, resiliency, and ongoing adaptation to technological change.

- Constraints on new innovation should be the last resort, not the first. Innovation should be innocent until proven guilty.

- When regulatory interventions are considered, policy should be based on evidence of concrete potential harm and not fear of worst-case hypotheticals.

- Finally, where policy interventions are deemed needed, flexible, bottom-up solutions of an ex-post (responsive) nature are almost always preferable to rigid, top-down controls of an ex-ante (anticipatory) nature.85Adam Thierer, “Does ‘Permissionless Innovation’ Even Mean Anything?” Fifth Annual Conference on Governance of Emerging Technologies: Law, Policy & Ethics, Arizona State University, Phoenix, AZ, May 18, 2017.

The concept of the nonmarket issue life cycle (life cycle) is a useful analytic concept to identify where a public issue is located in the public policy environment. The life cycle consists of a public issue progressing through five stages of maturity:

- issue identification

- interest group formation

- legislation

- administration

- enforcement86David P. Baron, Business and Its Environment (7th edition) (Boston: Pearson, 2013).

As Baron notes: “[A]s the impact [of the issue] increases, [firm] management’s range of discretion addressing the issue correspondingly decreases.”87Baron, Business and Its Environment, 14. In the case of AI, recent activity in the US public policy arena suggests that some form of federal legislation (stage 3 in the nonmarket issue life cycle) is poised to emerge in the 118th Congress, setting a policy direction for a regulatory framework that exercises some form of social control over ANI technology.

Likewise, given the issues associated with the pacing problem and the limits of human knowledge (e.g., concerning what is known and unknown), the question of how to effectively and efficiently regulate AI to socially control this emerging technology while capturing its evolutionary benefits is the “existential” challenge before American society. The precautionary principle has the inherent problem of attempting to pre-emptively eliminate all or most hypothetical risk from emerging technologies—a potentially significant barrier to encouraging technological innovation for the benefit of American society. Likewise, permissionless innovation, as Thierer notes, only favors public regulatory approaches to a new technological innovation, such as AI, based on the actual evidence of potential harm to society.88Adam Thierer, “Flexible, Pro-Innovation Governance Strategies for Artificial Intelligence,” R Street Policy Study, No. 283, April 2023, https://www.rstreet.org/wp-content/uploads/2023/04/Final_Study283.pdf.

The permissionless innovation governance approach, as seen in the recent congressional fact-finding inquiries and legislative proposals (also based on evidence of actual “harms” from existing ANI activities), is no longer considered a serious public policy consideration for ANI regulatory frameworks.89Darrell M. West, “The End of Permissionless Innovation,” The Brookings Institution, October 7, 2020, accessed July 25, 2023, https://www.brookings.edu/articles/the-end-of-permissionless-innovation/. Even Thierer, who is a longtime advocate, recognizes the importance of soft law complementing hard law approaches to AI risk management in order to address actual evidence of ANI harms.90Thierer, “Flexible, Pro-Innovation Governance Strategies for Artificial Intelligence;” Gutierrez and Marchant, “A Global Perspective of Soft Law Programs,” 33. However, the responsible innovation approach shares conceptually more in common with permissionless innovation than with the precautionary principle.91Thomas A. Hemphill, “The Innovation Governance Dilemma: 21st Century Governance Alternatives to the Precautionary Principle,” Technology in Society 63: 2020, https://doi.org/10.1016/j.techsoc.2020.101381. It is congruent with present AI policy efforts being considered by Congress and the executive branch, reflecting what Valdivia and Guston characterize as “collective choice” along with “democratic principles.”92Valdivia and Guston, Responsible Innovation.

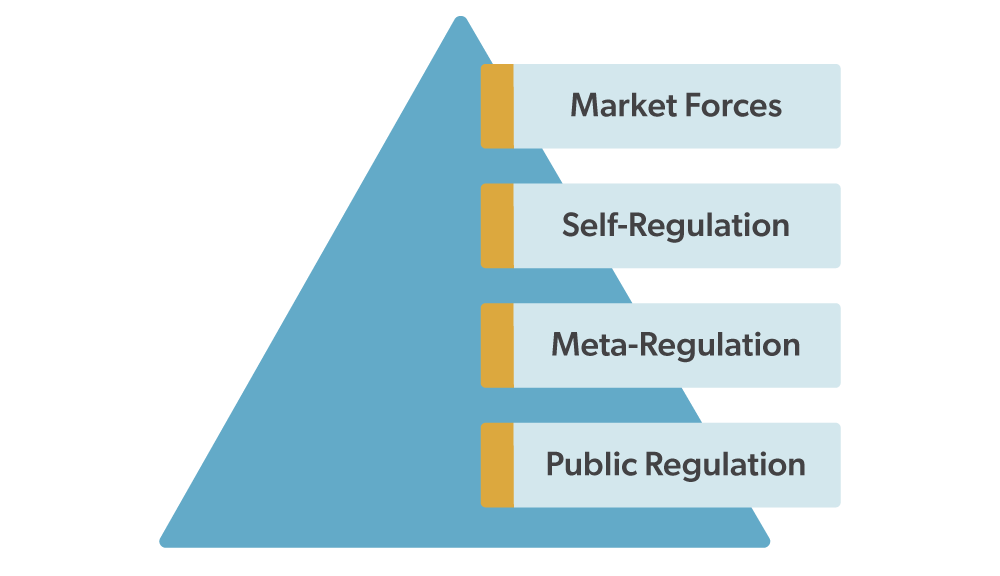

There is a body of public thought and policy tools available to help US policymakers and regulators effectively and efficiently design an AI policy path forward. Figure 2 illustrates the Regulatory Pyramid, which offers a broader policy-focused approach to addressing the national AI regulatory/governance challenge. This is in contrast to the specific focus of regulatory forms listed in the Industry Regulatory Spectrum (figure 1). Within the Regulatory Spectrum, the category of market forces is no longer being considered a stand-alone form of social control over AI in the US.

Private AI governance in the form of self-regulation has been developed and used by US-based industry associations and private firms over the last few years. Public regulation is now being proactively discussed in the 118th Congress. However, given the issues related to pacing and dynamically emerging ANI technology, as well as the importance of responsible innovation as an effective conceptual approach to the governance of AI, meta-regulation offers the most realistic and practical approach to governing the future commercial development and implementation of AI.

Figure 2. Regulatory Pyramid (figure labels: Free Market Regulation; Governmental Command-and-Control Regulation)

Source: Ian I. Ayres and John Braithwaite, Responsive Regulation: Transcending the Deregulation Debate, (Oxford University Press: Oxford, 1992).

Meta-regulation, as defined broadly by Grabowski, includes “activities occurring in a wider regulatory space, under the auspices of a variety of institutions, including the state, the private sector, and public interest groups.”93Peter Grabowski, “Chapter 9: Meta-Regulation,” in Regulatory Theory: Foundations and Applications, Peter Drahos, ed. (ANU Press: Canberra, 2017, 149–162). Meta-regulation, say Coglianese and Mendelson, specifically “refers to ways that outside regulators deliberately—rather than unintentionally—seek to induce targets to develop their own internal, self-regulatory responses to public problems.”94Cary Coglianese and Evan Mendelson, “Chapter 8: Meta-Regulation and Self-Regulation,” in The Oxford Handbook of Regulation, Robert Baldwin, Martin Cave, and Martin Lodge, eds. (Oxford University Press: Oxford, 2010, 146–168), https://doi.org/10.1093/oxfordhb/9780199560219.003.0001. As Taylor notes in relation to his research on AI, “It is useful not to speak about a ‘policy’ but about the ‘policy space.’ Otherwise, there is risk that the basket of policy alternatives and tools is conceived too narrowly.”95Richard D. Taylor, “Quantum Artificial Intelligence: A ‘Precautionary’ U.S. Approach?” Telecommunications Policy 44, no. 6, July 1, 2020: 1–12 (emphasis added). Thus, public regulation (hard law) is complemented by self-regulation (soft law), making the meta-regulation policy “space” the most effective and efficient governance framework designed for AI technology commercialization.

Within the Industry Regulatory Spectrum (figure 1), the specific forms of regulation qualifying as meta-regulation include self-regulation plus non-governmental participation, self-regulation plus governmental participation, self-regulation plus governmental policing of deceptive practices, and self-regulation plus an autonomous government agency with rule-making authority. In addition, in the case of ANI, there are also ex post statutes that can be enforced against specific illegal business behavior.96Thierer, “Flexible, Pro-Innovation Governance Strategies for Artificial Intelligence,” 34–36. These industry regulatory policy approaches are referred to later in the recommended legislative policy proposal.

An example of industry meta-regulation is the Financial Industry Regulatory Authority (FINRA), a government-authorized, not-for-profit organization funded through membership fees and fines that oversees US broker-dealers.97“What We Do,” About FINRA, Financial Industry Regulatory Authority (FINRA), accessed July 28, 2023, https://www.finra.org/about/what-we-do.

FINRA undertakes these efforts to protect the investing public against fraud and bad industry practices.98“What We Do,” FINRA. Its board of governors is composed of 22 industry and public members.99“Governance,” About FINRA, Financial Industry Regulatory Authority (FINRA), accessed July 28, 2023, https://www.finra.org/about/governance. FINRA qualifies as a “self-regulation plus governmental participation” form of meta-regulation, which includes its quasi–law enforcement authority to sanction (through enforcing rules) and to audit firms (for compliance with its rules). However, there is criticism of FINRA’s performance and the meta-regulatory approach in general. “Due process, transparency and regulatory-review protections normally associated with regulators are not present, and its arbitration process is flawed. Reforms are necessary,” writes David Burton, a senior fellow in economic policy at the Heritage Foundation, in a policy paper focused on reforming FINRA.100David R. Burton, “Reforming FINRA,” Backgrounder, no. 3181, The Heritage Foundation, February 1, 2017, 11, https://www.heritage.org/sites/default/files/2017-02/BG3181.pdf.

The essential characteristics of any regulatory or governance approach, including meta-regulation, involve four distinct components useful for AI policy analysis: target, regulator, command, and consequences.101Coglianese and Mendelson, “Chapter 8: Meta-Regulation and Self-Regulation,” 148. The target “is the entity to which the regulation applies and upon whom the consequences are imposed.”102Coglianese and Mendelson, “Chapter 8: Meta-Regulation and Self-Regulation,” 148. Traditionally, this entity would be an individual or an organization, such as a firm, government, or non-profit. However, in the case of ANI, the targets are the applications of the general purpose technology, e.g., employment hiring, credit approval, etc.

The regulator “is the entity that creates and enforces the rule or regulation,” including governmental and non-governmental standard-setting bodies or industry trade associations.103Coglianese and Mendelson, “Chapter 8: Meta-Regulation and Self-Regulation,” 148. Within a meta-regulation ANI governance approach, regulators include federal agencies, industry standard-setting associations, individual corporations, and relevant non-governmental stakeholders.

The command “refers to what the regulator instructs the target to do or to refrain from doing. Commands can either specify means or ends.”104Coglianese and Mendelson, “Chapter 8: Meta-Regulation and Self-Regulation,” 148. Means commands—technology design, or specification standards—mandate or prohibit the taking of a specific action. Ends commands, called performance standards, “do not require any particular means, but instead direct the target to achieve (or avoid) a specified outcome related to the regulatory goals.”105Coglianese and Mendelson, “Chapter 8: Meta-Regulation and Self-Regulation,” 148.

The federal public governance AI environment consists of a range of voluntary and advisory AI guidance documents (including “means” and “ends” recommendations) for firms and industries issued by the Department of State, Department of Defense, White House Office of Science and Technology Policy, National Institute of Standards and Technology, National Science Foundation, and the National Telecommunications and Information Administration. Likewise, US-based private governance approaches developing and implementing AI policy principles reveal examples of such statements at various organizational levels, including the firm (Alphabet, Meta, and Microsoft), industry (ITI), and cross-industry (US Chamber of Commerce).

What scope of commands is missing? In general, what is missing is a coherent national AI policy framework that recognizes both the limits of public regulation and the necessity for private governance and industry self-regulation, and that ensures the product development and innovative commercial implementation of AI in the future.

As mentioned earlier, there are two camps on the subject of AI: one wanting immediate legislation that establishes national rules for AI implementation, and a second wanting a go-slow approach, learning more about AI technology, and eventually offering an informed legislative response that does not inhibit technological innovation and commercial success. However, there is a growing body of knowledge available on existing ANI performance in the commercial sector of the economy, and an opportunity for congressional leadership to establish a meta-regulatory outline of an ANI framework for future commercial implementation.

Proposed congressional legislation on AI development and implementation could include the following provisions:

- Formally endorse a framework of AI policy principles. There has been a convergence among stakeholders in AI development and implementation on what constitutes a body of core governance principles. The White House’s OSTP has identified the following five AI principles: “safe and effective systems, algorithmic discrimination protection, data privacy, notice and explanation, and human alternatives, consideration, and fallback.”106“Blueprint for an AI Bill of Rights,” The White House. Likewise, this paper uncovered seven such AI categories of principles from private sector, self-regulation efforts: fairness and bias, safety and reliability, accountability, privacy and security, responsible R&D, transparency and accessibility, and societal improvement. Moreover, a 2019 global survey of organizations analyzing 84 AI ethical frameworks found results converging around the following five principles: transparency, justice and fairness, non-maleficence, responsibility, and privacy.107Anna Jobin, Marcello Ienca, and Effy Vayena, “The Global Landscape of AI Ethics Guidelines,” Nature Machine Intelligence 1, no. 9, September 2019: 389–399, https://doi.org/10.1038/s42256-019-0088-2. At this time, Congress is the ideal democratic, deliberative body to identify, designate, and endorse a core framework of distinct, responsible AI principles useful as a definitive baseline departure point for future AI meta-regulation policy efforts. Over time, and through accumulated learning experience, such a framework can be legislatively amended. This formal recognition is not only helpful for national meta-regulatory institutional efforts, but will also be a departure point for similar developments in standards harmonization in the international environment, including, for example, future updates to the OECD’s Recommendations on Artificial Intelligence.
This meta-regulation provision is indicative of the regulatory form, self-regulation plus governmental participation, found in the Industry Regulatory Spectrum.

- Expand the role of the NAIAC to encourage widespread responsible adoption of AI across the federal government, as well as in the non-governmental and private sectors. To some extent, the NIST has been playing a lead role for many years, culminating in its important release of the AI RMF early in 2023. Those efforts, and the NIST’s ongoing AI Playbook process, should constitute the heart of the National Artificial Intelligence Advisory Committee (NAIAC). As others have recommended, NIST could also work with NTIA (as they are both located within the US Department of Commerce) in a more formal way to actively engage relevant stakeholders to develop contextually tailored, voluntary “best practices.”108Adam Thierer, “Comments of the R Street Institute to the National Telecommunications and Information Administration (NTIA) on AI Accountability Policy,” R Street Institute, June 9, 2023, accessed July 25, 2023, https://www.rstreet.org/outreach/comments-of-the-r-street-institute-to-the-national-telecommunications-and-information-administration-ntia-on-ai-accountability-policy/. In a formal, institutional setting, however, federal government efforts to identify and develop AI guidelines have generally been single-agency oriented, although they have included multi-agency projects, as legislatively required in the National AI Initiative Act of 2020. In April 2022, as required by the National AI Initiative Act of 2020 (Initiative), the NAIAC was officially launched.109“About the Advisory Committee,” National AI Advisory Committee (NAIAC), accessed July 29, 2023, https://www.ai.gov/naiac/. Congress has directed the NAIAC to provide:

Recommendations on topics including the current state of US AI competitiveness; progress in implementing the Initiative; the state of science around AI; issues related to AI workforce; how to leverage Initiative resources; the need to update the Initiative; the balance of activities and funding across the Initiative; the adequacy of the National AI R&D strategic plan; management, coordination, and activities of the Initiative; adequacy of addressing societal issues; opportunities for international cooperation; issues related to accountability and legal rights; and how AI can enhance opportunities for diverse geographic regions.110“About the Advisory Committee,” National AI Advisory Committee (NAIAC).

By explicitly amending the National AI Initiative Act of 2020, the role of the NAIAC could be formally expanded to encourage and monitor widespread responsible adoption of AI across the federal government, as well as in the non-governmental and private sectors. The NAIAC would be charged with coordinating, encouraging, and facilitating emerging governance ideas and meta-regulation best practices found among AI practitioners, an apex role best suited for the public sector.

An annual “state-of-the-technology” report should be provided to Congress by the NAIAC (in contrast to the triennial report required under the existing legislation),111“About the Advisory Committee,” National AI Advisory Committee (NAIAC). thus allowing for the timely legislative response needed to address any public regulatory issues that arise. This meta-regulation provision is indicative of the regulatory forms of self-regulation plus non-governmental participation and self-regulation plus governmental participation found in the Industry Regulatory Spectrum.

Moreover, a sunset law, also known as a sunset provision, automatically terminates an agency, a law, or a government program that fails to procure legislative approval beyond a fixed period of time.112“Sunset Law,” Legal Information Institute, Cornell Law School, November 2021, accessed July 28, 2023, https://www.law.cornell.edu/wex/sunset_law. This could be a legal instrument for updating AI legislation every three years. An example of a sunset law is H.R. 302 (P.L. 115-254), the FAA Reauthorization Act of 2018, which was signed into law on October 5, 2018. It extended the FAA’s funding and authorities through fiscal year 2023.113“FAA Reauthorization,” Federal Aviation Administration, accessed July 28, 2023, https://www.faa.gov/about/reauthorization.

- Designate industry-level standards-setting organizations and affected stakeholder representation as active participants in the AI development and implementation committee. The self-regulation component of non-governmental and private governance is required for effective and efficient meta-regulation to flourish in the public and private sectors, and consequently across American society. The inclusion of representative, industry-level, standards-setting organizations is an essential component for this committee’s long-term success. The makeup of this committee would be critical, as it will need to keep abreast of developments in a general-purpose technology (i.e., one being used and developed across a wide spectrum of industries), which by its very nature must be malleable and adapt to each industry. As this ANI technology is often a public-facing technology, the market demand for adaptation and iterative improvements would exert forces on AI technology development used in the various industries. These factors combine to demonstrate that a committee designated to oversee this technology must be flexible, adaptable, and ready to quickly shift directions as the technology is rapidly developed in unexpected directions. This dynamic pace suggests that a traditional legislative approach, which is often marred by partisan divides and dysfunction, is inadequate to address ANI regulation and that the aforementioned multi-industry and stakeholder representative committee will be best equipped to keep pace with the technology utilizing self-regulation. This meta-regulation provision is indicative of both the regulatory forms, self-regulation plus non-governmental participation and self-regulation plus governmental participation, found in the Industry Regulatory Spectrum.

- Encourage soft law approaches when considering a meta-regulatory approach. By its nature, soft law is necessary for rapid adaptation to regulatory responses to an emerging technology, in this case, ANI.114Ryan Hagemann, Jennifer Huddleston, and Adam D. Thierer, “Soft Law for Hard Problems: The Governance of Emerging Technologies in an Uncertain Future,” Colorado Technology Law Journal, April 24, 2019, https://ssrn.com/abstract=3118539. Eggers, Turley, and Kishnani offer a set of five regulatory approaches to assist AI regulators in efforts to best meet the temporal needs of ANI, the immediate subject of American societal concerns. All of these approaches can incorporate decentralized soft law into an ANI meta-regulatory framework:

- Adaptive regulation: Shift from “regulate and forget” to a responsive, iterative approach.

- Regulatory sandboxes: Prototype and test new approaches by creating institutional sandboxes and accelerative mechanisms.

- Outcome-based regulation: Focus on results and performances rather than form.

- Risk-weighted regulation: Move from one-size-fits-all regulation to a data-driven, segmented approach.

- Collaborative regulation: Align regulation nationally and internationally by engaging a broader set of players across the ecosystem.115William D. Eggers, Mike Turley, and Pankaj K. Kishnani, “The Future of Regulation: Principles for Regulating Emerging Technologies,” Deloitte Insights, June 19, 2018, accessed May 27, 2023, https://www2.deloitte.com/us/en/insights/industry/public-sector/future-of-regulation/regulating-emerging-technology.

Thierer identifies a list of soft law, market-driven, private sector activities useful for ANI governance consideration, including:

Insurance markets, which serve as risk calibrators and correctional mechanisms; social norms and reputational effects, especially the growing importance of reputational feedback mechanisms; societal pressure and advocacy from media, academic institutions, nonprofit advocacy groups, and the general public, all of which can put pressure on technology developers; and ongoing innovation and competition within markets.116Thierer, “Flexible, Pro-Innovation Governance Strategies for Artificial Intelligence,” 11. This meta-regulation provision is indicative of the regulatory form, self-regulation plus non-governmental participation, found in the Industry Regulatory Spectrum.

The body of existing federal and state statutes useful for ANI risk mitigation has not been fully explored and identified. This would be a useful project to assist stakeholders (exercised through a comprehensive committee disclosure strategy) in knowing what legal recourse may be available to them should they want to address a grievance. This meta-regulation provision is indicative of the regulatory form, self-regulation plus an autonomous government agency with rule-making authority, found in the Industry Regulatory Spectrum.

- Investigate and identify the inventory of existing federal ex-post hard law available for enforcing ANI violations and assisting in mitigating risk. Thierer has offered an excellent introduction to an existing hard law complement to soft law meta-regulatory approaches, including federal and state consumer protection statutes. These may include, for example, statutes enforced by the Federal Trade Commission’s Division of Advertising Practices; federal product recall authority enforced by the Consumer Product Safety Commission; automated vehicle policy and regulations as enforced by the National Highway Traffic Safety Administration; rules for small, unmanned aircraft systems (i.e., drones), enforced by the Federal Aviation Administration; rules for mobile phone privacy disclosures and mobile applications for children, enforced by the Federal Communications Commission; rules for 3D-printed medical devices, enforced by the Food and Drug Administration; common law authority (i.e., civil remedies enforced through product liability law); property and contract law; insurance and other accident-compensation mechanisms; and other existing statutes and agencies, even the Civil Rights Act, for legally enforcing against potential algorithmic bias and discrimination.117Thierer, “Flexible, Pro-Innovation Governance Strategies for Artificial Intelligence” 33–36.

6 Discussion

In the introduction to the paper, the following research question was posed: What kind of governance framework would best manage AI risks and promote its benefits for American society? The answer: Embrace a flexible and adaptable meta-regulation approach for addressing social control of a still emerging technology, while encouraging the potential commercial benefits accruing from future AI technological innovation through private governance initiatives.

American society has an important stake in the ongoing development and implementation of ANI across industries. The limitations of public regulation and the pacing problem of this technology make it vital that regulating authority be able to pivot with innovations, which legislatures don’t do well.

The US government and AI stakeholders can learn valuable lessons for meta-regulation from the development and implementation experiences of the European Union’s AI Act.

The overall intention of the AI Act was to balance AI regulation with safety considerations, the rights of citizens, business interests, and technological innovation.118Thierer, “Flexible, Pro-Innovation Governance Strategies for Artificial Intelligence” 33–36. However, its regulatory approach has numerous flaws. First, the issue of model transparency and evaluation arises when considering how, for example, ChatGPT is used in the case of medical applications versus for entertainment purposes. Second, when it comes to dataset construction and training methods, for example, the AI Act does not consider these downstream uses of models. Third, the AI Act does not currently address the important issue of model access, which allows AI researchers to assess and evaluate these AI models.119Shana Lynch, “Analyzing the European Union AI Act: What Works, What Needs Improvement,” Human-Centered Artificial Intelligence, Stanford University, June 21, 2023, accessed July 29, 2023, https://hai.stanford.edu/news/analyzing-european-union-ai-act-what-works-what-needs-improvement.

In addition, legal transparency clarification and data set, model, and process documentation are missing. Access to systems to design safeguards and conduct evaluations, whether in an open or closed source model, is still needed by researchers, and standards to evaluate bias in language models, for example, are not included in the AI Act.120Shana Lynch, “Analyzing the European Union AI Act.”

Congress should recognize that private governance is a major component of addressing basic issues related to the future of AI development and implementation across industries. Each industry will have unique issues related to commercializing ANI, and therefore will be in the ideal position to know the best practices to be instantiated in its standard-setting processes. What is crucial for American consumers is that there are effective, operational policies delineating ANI practices and performance, and clear, consumer-accessible information disclosure on how well each firm is abiding by these industry ANI best practices.